MediaFlies

A Video and Audio Remixing Multi Agent System

Dr. Daniel Bisig, BSc, PhD.

Artificial Intelligence Laboratory, University of Zurich, Switzerland.

e-mail: dbisig@ifi.unizh.ch

Prof. Dr. Tatsuo Unemi, MEng, DEng.

Department of Information Systems Science, Soka University, Tokyo, Japan.

e-mail: unemi@iss.soka.ac.jp

Abstract

The project MediaFlies realizes an interactive multi-agent system, which remixes live and prerecorded audio and video material. Agents engage in flocking and behavior synchronization and thereby control the material's continuously changing fragmentation and rearrangement. Visitors can influence the agents' behaviors via a video tracking system and thus shift the ratio of disorder and recognizability in MediaFlies' acoustic and visual feedback.

1. Introduction

Research in the fields of Artificial Life and Artificial Intelligence forms an important conceptual and technical background for people who produce algorithmic and generative art. In these fields, art and science share a common interest in issues such as emergence, self-organization, complexity, autonomy, adaptivity and diversity. Complex and self-organized systems have a great appeal for art, since they possess the capability to continuously change, adapt and evolve [1]. A group of animals such as a flock of birds constitutes an example of a self-organized system. Flocking algorithms, which model these behaviors, are mostly based on the seminal work of Craig Reynolds [2]. Flocking algorithms give rise to lifelike and dynamic behaviors that can easily be adapted to control a variety of visual or acoustic parameters. In addition, interaction with a flocking based artwork often seems very intuitive and natural. Examples of flocking based generative systems include robotic works such as “ARTsBot” [3], interactive musicians [4], generative sound synthesis [5][6], virtual orchestras [7][8][9], and interactive video installations such as “SwarmArt” [10] and “swarm” [11]. The project MediaFlies is similar to the works of Blackwell and Shiffman, in that it employs a sample driven approach to generative art. The system doesn’t create its output entirely de novo but rather by combining human generated visual and acoustic input with the generative activity of the flock. We are convinced that this approach holds considerable potential for generative art, in that it tries to combine human and machine based creativity and aesthetics.

2. Concept

MediaFlies resembles an artist whose creative approach is based on an analysis and subsequent reconfiguration of source material into an audiovisual collage. This source material, which stems from an archive of digital media material and the system's own audiovisual perceptions, continuously feeds into MediaFlies' memory. The memory forms a three-dimensional space, which is populated by a swarm of agents. These agents continuously collect and discard memory fragments as they roam this audiovisual memory. Via their mutual interactions, the agents collectively structure these fragments into associative patterns, which eventually resurface as amorphous or distinct features in the constantly shifting stream of acoustic and visual feedback.

MediaFlies is an interactive system in that the visitor can influence the behavior of the flocking agents. Fidgety visitors disrupt the generation of recognizable associations up to the point of pure visual and acoustic noise. Calm visitors promote the formation of highly structured and organized feedback regions, which eventually reform into the visitors’ own mirror images.

3. Implementation

MediaFlies is written in C++ and implements a multi-agent system whose behavior is controlled via a flocking simulation and behavior synchronization. The agents operate on visual and acoustic data, which is acquired via live capture and from stored media material. Visual material is fragmented into small rectangular regions and subsequently recombined via multitexturing and alpha-blending. The fragmentation and recombination of audio material is based on granular synthesis. The spatial position of video and audio fragments is controlled via the movement of the agents. The parameters controlling the generation of these fragments are influenced via behavior synchronization. Interaction is based on video tracking and includes detection of both the visitors’ positions and their directions of movement. These implementations are described in more detail in the following subsections.

2.1 Media Acquisition

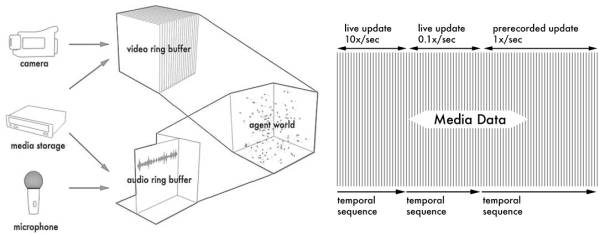

MediaFlies operates on live and prerecorded video and audio data. Live material is acquired via a standard DV camcorder and a directional microphone. This material is subsequently fed into two ring buffers (see figure 1, left image). A video ring buffer stores individual video frames in sequential order. Similarly, an audio ring buffer stores audio samples. The current version of MediaFlies segments each ring buffer into several regions, which operate independently. For this reason, MediaFlies can acquire media material at a variety of update rates and feed it into different regions of the corresponding ring buffers (see figure 1, right image).
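
The segmentation of a ring buffer into independently updated regions can be illustrated with a minimal Python sketch. This is not the authors' C++ code: the class name `RegionRingBuffer`, its methods, and the region sizes are hypothetical, and strings stand in for video frames or audio blocks.

```python
class RegionRingBuffer:
    """Fixed-size buffer split into regions that wrap around independently."""

    def __init__(self, region_sizes):
        # Hypothetical layout: regions are stored back to back in one list.
        self.starts = []
        offset = 0
        for size in region_sizes:
            self.starts.append(offset)
            offset += size
        self.sizes = list(region_sizes)
        self.cursors = [0] * len(region_sizes)  # one write cursor per region
        self.slots = [None] * offset

    def write(self, region, item):
        """Store item in the given region, overwriting that region's oldest entry."""
        index = self.starts[region] + self.cursors[region]
        self.slots[index] = item
        self.cursors[region] = (self.cursors[region] + 1) % self.sizes[region]


# Region 0 receives live material at a high rate, region 1 archive material
# at a low rate (both rates are assumptions for this example).
ring = RegionRingBuffer([3, 2])
for frame in ["live0", "live1", "live2", "live3"]:
    ring.write(0, frame)
ring.write(1, "clip0")
```

Because each region keeps its own write cursor, a fast live source only overwrites its own region and never displaces slowly updated archive material.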

2.2 Agent System

In MediaFlies, agents populate a 3D world that is continuous in time and space and which exhibits periodic boundary conditions. Agents update their neighborhood relationships by employing an octree space partitioning scheme. The spatial extensions of the agent world overlap with the ring buffers (see figure 1, right image).

Accordingly, a position within the agent world corresponds to a position within each ring buffer. Agents are organized within agent groups. These groups manage the behavioral repertoire of all agents contained within. This repertoire consists of a list of basic behaviors, each of which conducts a single activity (such as moving away from another agent or changing the duration of a sound grain).
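
The following Python sketch illustrates how periodic boundary conditions and the correspondence between agent world and ring buffer might fit together. It is an assumption-laden illustration: the function names are hypothetical, and the choice of the z axis as the axis that maps onto the temporal order of the video ring buffer is a guess that the text does not confirm.

```python
def wrap(position, world_size):
    """Apply periodic boundary conditions independently on each axis."""
    return tuple(p % s for p, s in zip(position, world_size))


def world_to_frame_index(position, world_size, frame_count):
    """Map the wrapped z coordinate linearly onto a frame index (assumed axis)."""
    z = wrap(position, world_size)[2]
    # The final modulo guards against z rounding up to exactly world_size[2].
    return int(z / world_size[2] * frame_count) % frame_count


world = (10.0, 10.0, 10.0)
wrapped = wrap((11.0, -1.0, 5.0), world)                      # agent left the world on x and y
frame = world_to_frame_index((0.0, 0.0, 5.0), world, 100)     # halfway along z
```

An agent that leaves the world on one side re-enters on the opposite side, so every agent position always corresponds to a valid ring buffer position.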

MediaFlies implements several agent groups. A relatively small group of agents (100 to 400) conducts true flocking. Agents in a significantly larger second group (500 to 4000) simply move towards the flocking agents. By this method, a larger number of agents can be simulated than if all agents conducted proper flocking. Two additional agent groups are directly responsible for generating MediaFlies' feedback. These feedback agents simply copy their positions from the second agent group. The parameters that control the agents' feedback generation are modified via behavior synchronization.

Figure 1: Media Acquisition. Left image: Live and prerecorded audio and video are continuously fed into ring buffers, which possess the same spatial extensions as the agent world. Right image: A ring buffer consists of a series of regions, each of which is updated at different intervals and with different media material.

2.3 Flocking Simulation

The flocking algorithm is closely related to the original Boids algorithm [2]. It implements the three basic behaviors of alignment, cohesion and evasion (see figure 2). The basic repertoire of flocking behaviors has been extended with two additional behaviors, which control how the agents respond to interaction (see section 2.7). Every flocking behavior generates a force that is added to the agent’s overall force vector. At the end of a simulation step, the agent’s theoretical acceleration is calculated from this summed force. By comparing the theoretical acceleration with the agent’s current velocity, linear and angular acceleration components are derived and subsequently clamped to maximum values. Finally, the agent’s velocity and position are updated by employing a simple explicit Euler integration scheme.
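
A single simulation step of this kind can be sketched in Python as follows. The sketch makes several simplifying assumptions that are not taken from the paper: all behaviors are weighted equally, agents have unit mass, and the separate clamping of linear and angular acceleration is replaced by a single clamp on the acceleration magnitude plus a speed limit. All names and default values are hypothetical.

```python
import math


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def scale(a, s):
    return tuple(x * s for x in a)


def length(a):
    return math.sqrt(sum(x * x for x in a))


def clamp_length(a, max_len):
    """Shorten vector a to max_len if it is longer, keeping its direction."""
    n = length(a)
    return a if n <= max_len else scale(a, max_len / n)


def mean(vectors):
    total = (0.0, 0.0, 0.0)
    for v in vectors:
        total = add(total, v)
    return scale(total, 1.0 / len(vectors))


def flocking_step(pos, vel, neighbors, dt=0.1, too_close=1.0,
                  max_acc=2.0, max_speed=5.0):
    """neighbors: list of (position, velocity) pairs within perception range."""
    force = (0.0, 0.0, 0.0)
    if neighbors:
        n_pos = [p for p, _ in neighbors]
        n_vel = [v for _, v in neighbors]
        force = add(force, sub(mean(n_vel), vel))   # alignment
        force = add(force, sub(mean(n_pos), pos))   # cohesion
        for p in n_pos:                             # evasion
            away = sub(pos, p)
            if 0.0 < length(away) < too_close:
                force = add(force, away)
    acc = clamp_length(force, max_acc)              # unit mass: a = F
    # Explicit Euler: the position uses the velocity from before this step.
    new_pos = add(pos, scale(vel, dt))
    new_vel = clamp_length(add(vel, scale(acc, dt)), max_speed)
    return new_pos, new_vel


rest = (0.0, 0.0, 0.0)
new_pos, new_vel = flocking_step(rest, rest, [((4.0, 0.0, 0.0), rest)])
```

In the usage example, a resting agent with one distant neighbor experiences only cohesion; the resulting force is clamped before it changes the velocity, and the position changes only in the following step.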

Figure 2: Flocking Behaviors. From left to right: Alignment: Agents adapt magnitude and direction of their velocity to the average velocity of their neighbors. Cohesion: Agents move towards the perceived center of their neighbors. Evasion: Agents move away from very close neighbors.

2.4 Behavior Synchronization

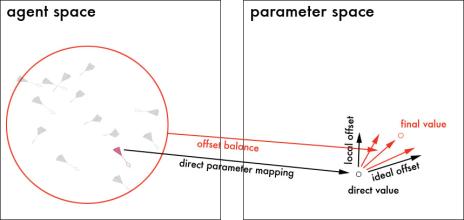

Each feedback agent possesses a set of behaviors that control the generation of a single visual or acoustic particle (see sections 2.5 and 2.6). Each of these behaviors depends on a set of parameters that control its output. The term behavior synchronization refers to the fact that neighboring agents constantly shift these parameters in response to each other towards some optimal value. A high degree of synchronization is achieved whenever agents possess many neighbors and all neighbors move coherently.

Parameters are implemented as vectors. A parameter vector is

calculated as a weighted sum of a direct vector and two offset vectors (see

figure 3). The direct vector is obtained via a linear mapping of an agent’s

position or movement from agent space to parameter space. The local offset

vector represents the difference between an agent's parameter value and the

averaged parameter value of it’s neighbors. The ideal offset vector represents

the difference between an agent's parameter value and a global ideal value from

which the parameter value shouldn't deviate too much. Prior to addition, these

offset vectors are multiplied by a value that balances each offset vector's

influence on the parameter vector (see equations 1 left equation). A target

local offset vector is obtained by calculating the difference between the

direct and local vector. The updated local offset vector results from shifting

the previous offset vector according to an adaptation rate towards the target

offset vector (see equations 1 right equation). The same principle applies to

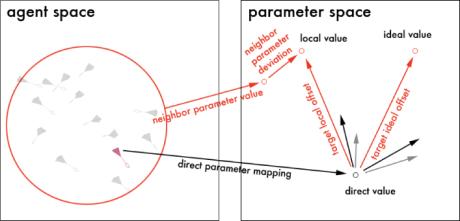

the calculation of an updated ideal offset vector. For some parameters, it is

preferable to maintain a certain difference between parameter values of

neighboring agents. This difference is stored in a further vector (named

“neighbor parameter deviation”) and added to the local vector (see figure 4).

Equations 1: Left Equation: Parameter Vector Calculation. Right Equation: Offset Vector Update.

Figure 3: Parameter Vector Calculation.

Figure 4: Offset Vector Update. Offset vectors at time t are depicted as unlabeled gray arrows; offset vectors at time t+1 are depicted as unlabeled black arrows.

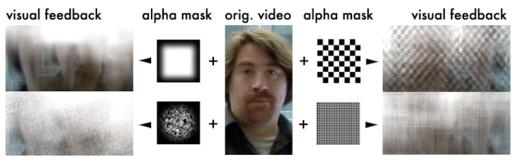
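
The parameter vector calculation and the offset vector update described in this section can be sketched in Python as follows. The sketch is a reading of the prose, not a transcription of equations 1: the function names, the weights `w_local` and `w_ideal`, and in particular the sign convention (target offset = reference vector minus direct vector) are assumptions.

```python
def parameter_vector(direct, local_offset, ideal_offset, w_local, w_ideal):
    """Weighted sum of the direct vector and the two offset vectors."""
    return [d + w_local * lo + w_ideal * io
            for d, lo, io in zip(direct, local_offset, ideal_offset)]


def update_offset(offset, direct, reference, rate):
    """Shift the offset towards (reference - direct) by the adaptation rate.

    reference is the averaged neighbor parameter (local offset) or the
    global ideal value (ideal offset).
    """
    return [o + rate * ((r - d) - o)
            for o, d, r in zip(offset, direct, reference)]


direct = [0.0]
neighbor_mean = [1.0]
offset_t1 = update_offset([0.0], direct, neighbor_mean, rate=0.5)
offset_t2 = update_offset(offset_t1, direct, neighbor_mean, rate=0.5)
param = parameter_vector(direct, offset_t2, [0.0], w_local=1.0, w_ideal=0.0)
```

With a constant neighbor average, repeated updates move the offset geometrically towards its target, so the agent's parameter converges on the neighbors' value at a speed set by the adaptation rate.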

2.5 Visual Feedback

Visual feedback is generated, by blending a multitude of

small rectangular image fragments into a seamless cluster of visual material.

These image fragments represent regions within the video ring buffer. Alpha

blending is controlled via static grayscale images, which act as alpha masks

(see figure 5). Video regions and alpha masks are multi-textured onto

quadrangles, which are implemented as billboards (i,e. they always face the

camera). The choice of alpha mask significantly affects the visual feedback.

High contrast masks cause flickering and interference like effects when

fragments shift across each other (see right two examples in figure 5). Low

contrast and smooth masks lead to “painterly” effects (see left two examples in

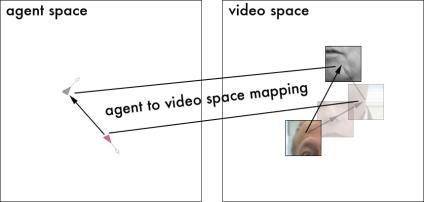

figure 5). Each video feedback agent controls the position and texture coordinates

of a single image fragment. Texture coordinates are implemented as 3D vectors

since they define not only a 2D image subregion but also a temporal position

within the video ring buffer. Texture coordinates are subject to

synchronization. The direct vector is derived from the agent's position. The

local offset vector points towards a position, which deviates from the local

value (see figure 6). This deviation is calculated from the difference in the

agent’s position and neighbor’s position in agent space, and is subsequently

mapped into texture coordinates space. For this reason, synchronization will

eventually recreate the original image material.

Figure 5: Masking and Visual Feedback.

Figure 6: Texture Coordinate Synchronization.
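
Why synchronized texture coordinates reassemble the source image can be illustrated with a small Python sketch. It assumes a simple per-axis linear mapping from agent space to texture coordinate space; the function name and the mapping factor `space_to_tex` are hypothetical.

```python
def target_texcoord(neighbor_tex, agent_pos, neighbor_pos, space_to_tex):
    """Texture coordinate the agent is drawn towards for one neighbor.

    The target equals the neighbor's coordinate plus the agents' spatial
    offset mapped into texture space, so adjacent fragments end up showing
    adjacent image regions. The third component is the temporal position
    within the video ring buffer.
    """
    return tuple(t + (a - n) * s
                 for t, a, n, s in zip(neighbor_tex, agent_pos,
                                       neighbor_pos, space_to_tex))


target = target_texcoord(
    neighbor_tex=(0.5, 0.5, 0.0),
    agent_pos=(2.0, 0.0, 0.0),
    neighbor_pos=(1.0, 0.0, 0.0),
    space_to_tex=(0.1, 0.1, 0.1),
)
```

An agent located one unit to the right of its neighbor is drawn towards a texture region slightly to the right of the neighbor's region and at the same temporal position, which is what lets a coherent cluster of fragments tile into the original frame.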

2.6 Acoustic Feedback

MediaFlies employs a similar approach for the generation of audio and video feedback. Audio material is modified into a continuous audio stream via a fragmentation and recombination process. This process is based on a combination of granular and subtractive synthesis. The steps involved in the generation of audio feedback are depicted in figure 7.

Figure 7: Steps in Audio Processing.

Figure 8: Control Parameters for Audio Generation. Lines and arrows indicate agent based parameter control. Single and double-headed arrows indicate synchronization dependent parameter control.

The audio ring buffer is continuously fed with live and prerecorded audio material. The ring buffer is scanned for audio parts that are above a certain amplitude threshold. These parts are labeled as emphasis regions. Grains are created exclusively from emphasis regions. This approach prevents audio feedback from becoming inaudible when very quiet audio material is partially present in the ring buffer. The implementation of granular synthesis is largely based on a publication by Ross Bencina [12]. Individual grains are filtered via a bandpass filter and spatialized for stereo or quadraphonic playback. Each audio feedback agent controls a set of parameters for the generation of a single audio grain (see figure 8).
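
The detection of emphasis regions can be sketched in Python as a scan for contiguous runs of samples above a threshold. This is a simplified illustration: a practical implementation would probably compare a smoothed amplitude envelope rather than individual samples, and the function name is hypothetical.

```python
def emphasis_regions(samples, threshold):
    """Return (start, end) index pairs, end exclusive, where |sample| > threshold."""
    regions = []
    start = None
    for i, s in enumerate(samples):
        loud = abs(s) > threshold
        if loud and start is None:
            start = i                      # a new region begins
        elif not loud and start is not None:
            regions.append((start, i))     # the current region ends
            start = None
    if start is not None:                  # region runs to the end of the buffer
        regions.append((start, len(samples)))
    return regions


regions = emphasis_regions([0.0, 0.5, 0.6, 0.0, 0.0, -0.7], threshold=0.3)
```

Grain start positions would then be drawn only from the returned index ranges, which keeps silent stretches of the buffer out of the output.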

Ring buffer tap speed, grain start time, and bandpass filter center frequency are synchronized among agents. Similar to synchronization of texture coordinates, grain start times shift towards values that differ among neighboring agents. Ring buffer tap speed is currently the only parameter that possesses a global target value. This target value corresponds to a ring buffer readout rate that equals the sampling rate.
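
The role of the tap speed can be illustrated with a Python sketch of reading one grain from a circular buffer. A tap speed of 1.0 corresponds to readout at the sampling rate, which is the global target value mentioned above. The Hann window and the linear interpolation are assumptions chosen for this example; bandpass filtering and spatialization are omitted, and the function name is hypothetical.

```python
import math


def read_grain(buffer, start, length, tap_speed):
    """Read `length` output samples, advancing tap_speed input samples each."""
    n = len(buffer)
    grain = []
    for i in range(length):
        pos = (start + i * tap_speed) % n          # wrap around the ring buffer
        i0 = int(pos)
        i1 = (i0 + 1) % n
        frac = pos - i0
        sample = buffer[i0] * (1.0 - frac) + buffer[i1] * frac
        if length > 1:
            window = 0.5 - 0.5 * math.cos(2.0 * math.pi * i / (length - 1))
        else:
            window = 1.0
        grain.append(sample * window)              # fade in and out
    return grain


ramp = [float(i) for i in range(8)]
grain = read_grain(ramp, start=1, length=3, tap_speed=2.0)
```

With a tap speed of 2.0 the grain skips every other input sample, which raises the pitch by an octave; synchronization pulls this value back towards 1.0 so that coherent agent groups reproduce the source at its original pitch.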

2.7 Interaction

Visitors can influence MediaFlies audiovisual feedback in

essentially two ways. They provide life video and audio source material for

processing. In addition, their position and movement is detected via video

tracking and affects the behavior of the flocking agents. Tracking is mostly

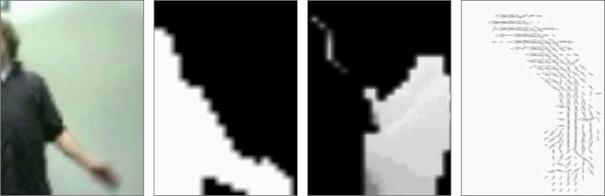

based on standard image segmentation techniques (see figure 9).

The running average method (Piccardi, 2004) is employed to discriminate foreground and background. Movement detection is based on the calculation of a motion history image, from which gradient vectors are derived. In order to obtain not only motion direction but also motion magnitude, a custom processing step has been included. This custom step elongates motion vectors along regions of constant brightness within the motion history image. Video tracking results in the formation of two 2D fields, which are coplanar with the x and y axes of the agent world and whose values extend along its z-axis. Flocking agents react to these fields via corresponding basic behaviors.

Figure 9: Video Tracking. From left to right: camera input image, background subtracted threshold image, motion history image, gradient vectors.
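
The first three stages of the tracking pipeline can be sketched in Python on small grayscale images represented as nested lists. The sketch uses the common textbook formulations of a running average background model and of a motion history image; whether MediaFlies uses exactly these variants is an assumption, and the gradient calculation and the custom elongation step are not covered. All function names are hypothetical.

```python
def update_background(background, frame, alpha):
    """Running average: blend the new frame into the background model."""
    return [[(1.0 - alpha) * b + alpha * f for b, f in zip(brow, frow)]
            for brow, frow in zip(background, frame)]


def foreground_mask(background, frame, threshold):
    """Mark pixels that differ from the background by more than threshold."""
    return [[1 if abs(f - b) > threshold else 0 for b, f in zip(brow, frow)]
            for brow, frow in zip(background, frame)]


def update_motion_history(mhi, mask, timestamp, duration):
    """Stamp foreground pixels with the current time, clear outdated ones."""
    return [[timestamp if m else (0.0 if h < timestamp - duration else h)
             for h, m in zip(hrow, mrow)]
            for hrow, mrow in zip(mhi, mask)]


background = [[0.0, 0.0, 0.0]]
frame = [[0.0, 1.0, 0.0]]
mask = foreground_mask(background, frame, threshold=0.5)
background = update_background(background, frame, alpha=0.5)
mhi = update_motion_history([[1.0, 0.0, 2.0]], mask, timestamp=3.0, duration=1.5)
```

Recently moved pixels carry the newest timestamps in the motion history image, so its spatial gradient points along the direction of motion.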

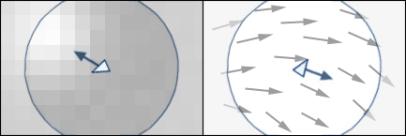

The “attraction field behavior” causes agents to sense within their perception range scalar values that are derived from background subtracted threshold images. Highest values in this field correspond to the foreground and lowest values to the background of the current threshold image. Intermediate values result from a diminishing influence of previous threshold images. The force vectors produced by this behavior cause agents to move laterally towards high scalar values (see figure 10, left image) as well as towards the front region of the agent world.
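
One way to obtain such a field and the resulting lateral force is sketched below in Python. The decay rule (previous values fade by a constant factor unless refreshed by the current threshold image) and the use of a central-difference gradient as the force direction are assumptions; the pull towards the front of the agent world is omitted, and the function names are hypothetical.

```python
def update_attraction_field(field, mask, decay):
    """Foreground pixels are set to 1.0; earlier values fade by `decay`."""
    return [[max(float(m), decay * v) for v, m in zip(frow, mrow)]
            for frow, mrow in zip(field, mask)]


def lateral_force(field, x, y):
    """Central-difference gradient at interior cell (x, y).

    The gradient points towards higher field values, so it can serve as a
    lateral attraction force.
    """
    fx = (field[y][x + 1] - field[y][x - 1]) * 0.5
    fy = (field[y + 1][x] - field[y - 1][x]) * 0.5
    return (fx, fy)


faded = update_attraction_field([[1.0, 0.0]], [[0, 1]], decay=0.5)
field = [[0.0, 0.0, 0.0],
         [0.0, 0.0, 1.0],
         [0.0, 0.0, 0.0]]
force = lateral_force(field, 1, 1)
```

In the example, the agent in the center cell is pulled along the positive x direction, towards the only cell that currently contains foreground.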

The “motion field behavior” applies a force vector to an agent, which is proportional to the average of the motion vectors within the agent's perception field (see figure 10, right image). These motion vectors are identical to the gradient vectors calculated by video tracking.
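
A minimal Python sketch of this behavior is given below. The circular perception field, the proportionality constant `gain`, and the data layout are assumptions made for the example.

```python
def motion_field_force(agent_xy, motion_vectors, perception_radius, gain):
    """Force proportional to the mean motion vector near the agent.

    motion_vectors is a list of ((x, y), (vx, vy)) pairs.
    """
    ax, ay = agent_xy
    sum_x = sum_y = 0.0
    count = 0
    for (x, y), (vx, vy) in motion_vectors:
        if (x - ax) ** 2 + (y - ay) ** 2 <= perception_radius ** 2:
            sum_x += vx
            sum_y += vy
            count += 1
    if count == 0:
        return (0.0, 0.0)                  # nothing moves nearby
    return (gain * sum_x / count, gain * sum_y / count)


vectors = [((1.0, 0.0), (2.0, 0.0)),
           ((0.0, 1.0), (0.0, 2.0)),
           ((10.0, 10.0), (100.0, 100.0))]  # outside the perception field
force = motion_field_force((0.0, 0.0), vectors, perception_radius=2.0, gain=0.5)
```

Only the two nearby vectors contribute, so the distant but much larger motion has no effect on this agent.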

Figure 10: Interaction Behaviors. From left to right: Attraction field behavior: agents move towards high values in the surrounding scalar field. Motion field behavior: agents move into the average direction of nearby motion vectors.

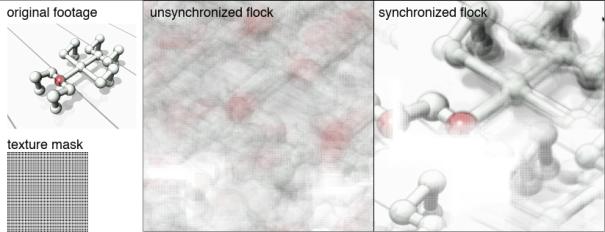

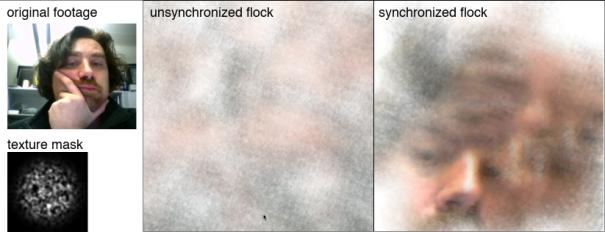

Interaction tends to disrupt the agents’ normal flocking behavior. Stationary or slowly moving visitors cause agents to slow down and gather at the visitors’ positions. Quickly moving visitors cause agents to accelerate and disperse throughout the agent world. Both responses have an indirect but significant effect on MediaFlies' audiovisual feedback. Agents that occupy the frontal part of the agent world predominantly operate on live video and audio material. Agents that reside further back use mainly prerecorded media material. Slowly and coherently moving agents excel in synchronization and cause the creation of dense and clustered regions in the audiovisual feedback. These regions clearly reproduce the original media material (see figures 11 and 12, right image). Fast and incoherent agent movements supersede synchronization behavior and cause amorphous and diffuse clouds of unrelated media fragments to dominate audiovisual feedback (see figures 11 and 12, middle image).

Figure 11: Synchronization of Prerecorded Video Material.

Figure 12: Synchronization of Live Video Material.

3. Results and Discussion

MediaFlies has been shown as an installation at the Tweakfest festival in Zurich (Switzerland), the AI50 summit on Monte Verita (Switzerland), and within the exhibition section of the ACE2006 conference in Hollywood (USA). The installation setup varied from event to event but was usually placed close to a highly frequented passageway or a bustling public space such as a cafeteria. The casual environment proved to be ideal for MediaFlies since it generated rich audiovisual input for live capture. In addition, the diversity of interaction-based agent responses was large, since both stationary and fast-moving visitors intermixed.

The software was run either on a Dual G5 Macintosh or a MacBook Pro. Visual output was presented via front or rear video projection or on a large plasma screen. Audio output was fed into a stereo or quadraphonic speaker setup or delivered via a set of wireless headphones. Video tracking was conducted via an iSight webcam. Live video material was obtained either from a second iSight webcam or a miniDV camcorder. Live audio material was obtained from a shotgun microphone. Concerning prerecorded media material, content was chosen that reflected the conceptual idea of agents wandering as thought particles through a space of audiovisual perceptions and memories. Examples of such material include images of faces and sounds of voices, which represent personal memories, and textural material, which stands for emotional memories. Figure 13 depicts a few examples of visual feedback that has been generated by MediaFlies.

Figure 13: Examples of MediaFlies Visual Feedback.

In between these exhibitions, MediaFlies has progressed through a series of modifications, which took feedback by visitors into account. Initial reactions were very positive concerning the characteristics of MediaFlies' audiovisual feedback, which seemed to reflect the situation and mood of its environment. During moments of hectic activity when people were rushing through the passageway, MediaFlies created fast paced movements of highly dispersed and quickly changing image and audio material (see figure 13, bottom left). These moments contrasted with more tranquil situations when people were standing or slowly walking. MediaFlies responded to these types of situations by creating slowly moving clusters of audiovisual fragments that blended together into recognizable features of the physical surroundings (see figure 13, top left). Interaction with MediaFlies proved to be somewhat problematic. Some visitors failed to notice that they could affect MediaFlies' behavior. An even larger number of visitors didn't understand how MediaFlies' feedback correlated with their own behaviors. These reactions led to the following changes in MediaFlies' implementation. The interaction-based 2D fields are visualized as graphical representations and are part of MediaFlies' feedback. The attraction field is depicted as an array of white circles whose radius is proportional to the attraction values (see figure 13, top left). The vectors in the motion field are depicted as white lines of corresponding orientation and length (see figure 13, top right). Furthermore, the interaction dependent agent behaviors are now easily distinguishable from flocking related behaviors. Agents that respond to interaction either accelerate to much higher velocities than they exhibit during flocking or become entirely motionless. These behaviors have a direct and visually very distinct impact on MediaFlies' feedback.

In its most recent incarnation, MediaFlies still suffers from some technical issues. The computational demands of the system are somewhat daunting. In order to achieve a high degree of fracturing and blending of audio and video, a large number of feedback agents needs to be simulated. This of course conflicts with the desire to display very fluid and smooth fragment motions, which requires high frame rates. The way texturing is handled also puts a significant load on the system. Textures are steadily updated based on new video input and therefore need to be continuously transferred to the video card. At the moment, the video tracking system also needs improvement since it is not very robust and requires a very long background subtraction phase. The desire to combine live audio capture and audio output obviously leads to feedback problems. By very careful placement of the shotgun microphone and speakers, feedback can be mostly eliminated. But in all exhibition situations in which we used speakers for audio output, the spatial restrictions prevented such an optimal setting.

4. Conclusion and Outlook

Based on the feedback we have received so far, we believe that MediaFlies has succeeded in providing an interesting and aesthetically fascinating form of audiovisual feedback. This positive feedback was mostly based on MediaFlies' capability to coalesce and dissolve discrete and recognizable visual and acoustic features within a constantly shifting stream of seamlessly blended live and prerecorded media material. Interactivity, on the other hand, turned out to be somewhat problematic, as most visitors didn't realize that their presence and movements affected MediaFlies' behavior. This issue can partially be attributed to the fact that MediaFlies, by its very nature, doesn't respond instantly and in an exactly reproducible manner to interaction. Rather, synchronization and (to a much lesser degree) changes in flocking behavior are somewhat slow processes, which only partially depend on interactive input. It seems that most visitors are used to fairly reactive installations, which exhibit immediate and obvious input-output relationships. Nevertheless, we are strongly convinced that autonomous and generative systems provide very rewarding forms of interaction as soon as visitors adapt to more explorative approaches in dealing with artworks.

We believe that the concept and current realization of MediaFlies have sufficient potential to justify further development. Plans for relatively short-term improvements concern MediaFlies' methods of media acquisition. In addition to live capture, we would like to implement some form of automated media selection mechanism. This mechanism is intended to replace either partially or entirely the current method of manually assigning audio and video files for MediaFlies to work with. For example, MediaFlies may autonomously select audio and video material from online or offline media repositories by employing some sort of similarity calculation based on the current live material. A second, more long-term extension of MediaFlies deals with the relationship between agent behavior and media material. Currently, the agents are entirely unaffected by the quality of the media material they present. We would like to introduce a feedback mechanism between the visual and acoustic output and the agents’ behaviors. By allowing agents to change their behaviors depending on the media fragments that they and their neighbors present, novel forms of algorithmic media recomposition could be explored. One possible way to achieve this could consist of letting the media material affect some of the physical or behavioral properties of the agents. This effect can be based on structural or semantic properties of the media material. Using such a system, agents may for instance learn structural and statistical properties present in one type of input media and try to reconstruct these properties when presenting a different type of media.

5. References

[1] Sommerer, C. and Mignonneau, L. 2000. Modeling Complex Systems for Interactive Art. In Applied Complexity - From Neural Nets to Managed Landscapes, 25-38. Institute for Crop & Food Research, Christchurch, New Zealand.

[2] Reynolds, C. W. 1987. Flocks, herds, and schools: A distributed behavioral model. Computer Graphics, 21(4):25-34.

[3] Ramos, V., Moura, L. and Pereira, H. G. 2003. ARTsBot – Artistic Swarm Robots Project. http://alfa.ist.utl.pt/~cvrm/staff/vramos/Artsbot.html

[4] Blackwell, T. 2003. Swarm music: improvised music with multi-swarms. Artificial Intelligence and the Simulation of Behaviour, University of Wales.

[5] Blackwell, T. and Jefferies, J. 2005. Swarm Tech-Tiles. EvoWorkshops, 468-477.

[6] Blackwell, T. and Young, M. 2004. Swarm Granulator. EvoWorkshops, 399-408.

[7] Unemi, T. and Bisig, D. 2004. Playing music by conducting BOID agents. Proceedings of the Ninth International Conference on Artificial Life IX, 546-550. Boston, USA.

[8] Unemi, T. and Bisig, D. 2005. Music by Interaction among Two Flocking Species and Human. Proceedings of the Third International Conference on Generative Systems in Electronic Arts, 171-179. Melbourne, Australia.

[9] Unemi, T. and Bisig, D. 2005. Flocking Orchestra. Proceedings of the 8th Generative Art Conference. Milano, Italy.

[10] Boyd, J. E., Hushlak, G. and Jacob, C. J. 2004. SwarmArt: interactive art from swarm intelligence. Proceedings of the 12th annual ACM international conference on Multimedia, 628-635. New York, NY, USA.

[11] Shiffman, D. 2004. swarm. Emerging Technologies Exhibition. Siggraph, Los Angeles, CA, USA.

[12] Bencina, R. 2001. Implementing Real-Time Granular Synthesis. To appear in K. Greenebaum and R. Barzel (Eds.), Audio Anecdotes III, A. K. Peters, Ltd.