Musical Score Generation

Dr. David Kim-Boyle, PhD.

Department of Music, University of Maryland, Baltimore County, Baltimore, U.S.A.

e-mail: kimboyle@umbc.edu

Abstract

The author describes work on a recent composition for piano and computer in which the score performed by the pianist is generated in real-time from a vocabulary of predetermined scanned score excerpts. The author outlines the algorithm used to choose and display a particular score excerpt and describes some of the musical difficulties faced by the pianist in a performance of the work.

1. Introduction

Valses and Etudes is a recent work for piano and computer in which the score performed by the pianist is generated in real-time during performance from a variety of predetermined scanned score excerpts. As in many of the author’s recent pieces, the musical source materials are taken literally from pre-existing works. For Valses and Etudes, this vocabulary includes Movement VI of Schoenberg’s 6 Kleine Klavierstücke Op. 19, the Second and Third Movements of Webern’s Variationen für Klavier Op. 27, several of Ravel’s Valses Nobles et Sentimentales, John Cage’s One 5, Chopin’s Nocturne No. 6, Op. 15 Nr. 3, and Debussy’s Prelude No. 10 (“La cathédrale engloutie”), Book One. A number of additional pieces are performed by the computer at several points in the work but are not called upon in the score generation process. The score selected for performance is conditioned by Markov chain probabilities, and the actual score excerpt displayed for the pianist is determined through a Jitter patch. This excerpt is not fixed, but varies dynamically during the performance. Unlike previous works of the author, in which the original source materials are extensively processed, Valses and Etudes involves very little audio processing of the materials. Rather, the musical complexity lies in the simultaneous performance of up to twelve or so pieces by the computer, and in the pianist’s interaction within this musical tapestry.

2. Score Generation Method

The first two pages of the scores for the pieces

noted above were scanned and edited in Adobe Photoshop. They were then saved as

high resolution JPEG files for further processing in the Jitter

environment. Jitter is a set of

external graphical objects for the Max/MSP programming environment which allows

live video processing and other graphical effects to be integrated into the MSP

audio environment. [1] In Valses and

Etudes, Jitter is used to frame particular score excerpts. This framing

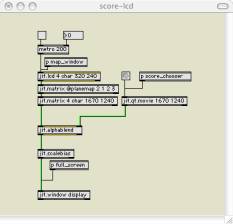

patch is illustrated in Figure 1.

Figure 1: Framing in Jitter

A small, random window is generated with the jit.lcd

object and the values are mapped to the alpha channel. The matrix is expanded

to the same resolution as the currently selected score and the window is then

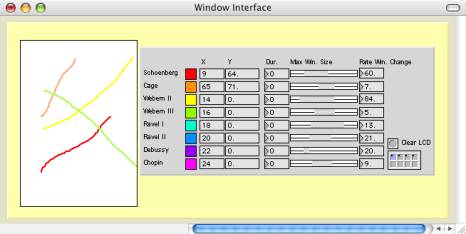

blended with the jpeg score file. A typical result is illustrated in Figure 2.

Figure 2: Typical framing result
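The framing steps described above can be approximated outside Jitter. The following is a minimal Python sketch, not the author's patch: it assumes a greyscale page held as nested lists, a nearest-neighbour expansion of the small window matrix, and a black background. The function names (random_window_mask, expand, blend) and the toy page dimensions are hypothetical and chosen only for illustration.

```python
import random


def random_window_mask(cols, rows, rng):
    """Small alpha matrix (rows x cols) with one random rectangle set to 1.0."""
    w = rng.randint(1, max(1, cols // 2))
    h = rng.randint(1, max(1, rows // 2))
    left = rng.randint(0, cols - w)
    top = rng.randint(0, rows - h)
    return [
        [1.0 if left <= x < left + w and top <= y < top + h else 0.0
         for x in range(cols)]
        for y in range(rows)
    ]


def expand(mask, width, height):
    """Nearest-neighbour upscale of the small mask to the score resolution."""
    rows, cols = len(mask), len(mask[0])
    return [
        [mask[y * rows // height][x * cols // width] for x in range(width)]
        for y in range(height)
    ]


def blend(score, alpha, background=0):
    """Show score pixels where alpha is 1.0 and the background where it is 0.0."""
    return [
        [round(a * pixel + (1.0 - a) * background)
         for pixel, a in zip(score_row, alpha_row)]
        for score_row, alpha_row in zip(score, alpha)
    ]


rng = random.Random(2005)               # arbitrary seed, for repeatability only
score = [[200] * 12 for _ in range(8)]  # toy stand-in for a scanned page
small = random_window_mask(4, 4, rng)
framed = blend(score, expand(small, 12, 8))
```

Only the pixels under the expanded window keep their original value; everything else falls to the background, which corresponds to the masked regions of the page in Figure 2.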

An obvious feature of Figure 2 is that the image needs to be rotated 90 degrees clockwise. This is not done in Jitter in order to maximize the display size of the image. Rather, for performance, the monitor from which the pianist reads is simply turned on its side on the piano. This necessarily involves some creative mounting. Another particularly important consideration is the need to obtain the maximum possible image clarity, given that the screen resolution cannot match the resolution of a traditionally printed paper score.

The windows mapped by Jitter are not fixed, as implied in Figure 2, but change dynamically during performance. Their size, rate of size change, trajectory across the score page, and speed of trajectory movement are all definable. Each of these parameters can also be randomized. Clearly, different rates of change will produce qualitatively different interpretations. The interface used to define these values is shown in Figure 3. Note that trajectory paths for all eight possible score selections are defined with a single lcd object and can be determined during or prior to the performance. The author has also experimented with Jean-Baptiste Thiebaut’s trajectory object to help define trajectory paths. [2] This object requires a different implementation but facilitates geometrical trajectories which are not easily obtainable with the implementation of Figure 3.

Figure 3: Window size/speed/trajectory interface
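To make the four window parameters concrete, the sketch below computes a window's centre and size at a given moment. It is an illustrative Python approximation, not the interface of Figure 3: it assumes the trajectory is a polyline of waypoints traversed at constant speed, and that the size change is a sinusoidal modulation around a base size. The names point_on_path and window_at, and all parameter names, are hypothetical.

```python
import math


def point_on_path(path, distance):
    """Position after travelling `distance` along a polyline, clamped at its end."""
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        seg = math.hypot(x1 - x0, y1 - y0)
        if seg > 0 and distance <= seg:
            t = distance / seg
            return (x0 + t * (x1 - x0), y0 + t * (y1 - y0))
        distance -= seg
    return path[-1]


def window_at(t, path, speed, base_size, size_depth, size_rate):
    """Window centre (x, y) and side length at time t, in seconds.

    speed      -- trajectory speed in pixels per second
    size_depth -- relative depth of the size change (0.5 means +/- 50 percent)
    size_rate  -- rate of size change in cycles per second (assumed sinusoidal)
    """
    cx, cy = point_on_path(path, speed * t)
    size = base_size * (1.0 + size_depth * math.sin(2 * math.pi * size_rate * t))
    return cx, cy, size


# Hypothetical trajectory across a page: right along the top, then downwards.
path = [(0, 0), (10, 0), (10, 10)]
cx, cy, size = window_at(1.5, path, speed=10, base_size=100,
                         size_depth=0.5, size_rate=1.0)
```

Randomizing any parameter, as the text describes, would amount to drawing speed, size_depth, size_rate, or the waypoints themselves from a random source before calling window_at.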

In Valses and

Etudes, source scores are selected during performance extemporaneously by

the computer operator. Score selection is based on a first order Markov Chain

algorithm where probabilities are determined with eight multisliders. The

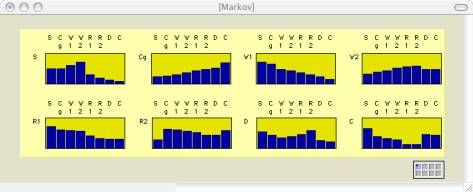

interface for this is illustrated in Figure 4.

Figure 4: Markov chain interface

Each multislider object represents one score performed by the pianist. The eight sliders of each multislider represent the probabilities that each of the eight scores will follow it. For example, the top left multislider determines the probabilities that another score will follow an instance of the sixth movement of Schoenberg’s 6 Kleine Klavierstücke. There is around a 50 percent probability that the Schoenberg score will be followed by the Schoenberg score or the Cage score, a slightly higher probability that it will be followed by the two Webern scores, and a decreasing probability that it will be followed by the Ravel, Debussy, or Chopin scores. Each score excerpt displayed is faded to digital black before the succeeding score is displayed.
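The selection process described above can be sketched as a weighted random choice over one row of a transition table. The Python below is a minimal illustration under stated assumptions, not the Max patch itself: each multislider is modelled as a row of non-negative weights that need not sum to one, and the three-score table and its values are invented for the example (the work itself uses eight scores). The names next_score and generate_sequence are hypothetical.

```python
import random


def next_score(current, sliders, rng=random):
    """Pick the index of the following score from the current score's slider row."""
    weights = sliders[current]
    if sum(weights) <= 0:
        raise ValueError("at least one slider in the row must be above zero")
    # random.choices treats the weights as relative, so no normalization is needed.
    return rng.choices(range(len(weights)), weights=weights, k=1)[0]


def generate_sequence(start, sliders, length, rng=random):
    """First-order chain: each choice depends only on the score just displayed."""
    sequence = [start]
    while len(sequence) < length:
        sequence.append(next_score(sequence[-1], sliders, rng))
    return sequence


# Invented slider settings for three scores; row i holds the weights for
# whichever score follows score i.
sliders = [
    [0.5, 0.3, 0.2],
    [0.1, 0.6, 0.3],
    [0.4, 0.4, 0.2],
]
order = generate_sequence(0, sliders, 10, rng=random.Random(19))
```

Because only the most recently displayed score is consulted, changing a slider during performance affects the very next selection, which fits the extemporaneous control described above.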

3. Musical Difficulties

One of the obvious difficulties the pianist faces in performing Valses and Etudes is the need to learn eight individual pieces. This prospect is made somewhat less daunting given that only the first two pages of each piece need actually be learned and that the pieces chosen are not particularly demanding technically. The source pieces are also, generally speaking, musically sparse, which helps the pianist blend more homogeneously with the works played by the computer.

One of the more subtle difficulties arises from moving between different publishers’ editions from one score to the next. This could easily be avoided in practice, however, by simply transcribing all of the source scores into Finale and from there producing visually consistent manuscripts from work to work.

A more obvious difficulty is the effect of the windowing process on the pianist’s interpretation of each of the source pieces. This is particularly challenging for the pianist as it goes against much in the way of traditional performance practice. To be faced with a score that is dynamically changing during performance or where only a fragment of the score may be visible forces the pianist to abandon, to a certain extent, traditional interpretative concepts of form and development.

4. Max Algorithm and Future Development

In the original form of the work, the order in which the piano works were played back by the computer was fixed. In more recent variations of the piece, the author has begun experimenting with more open-form Max patches that stochastically determine work selections. One of the first efforts at this is based on an extension of the first-order Markov chain process outlined in Section 2.

The author is also extending the work realized in Valses and Etudes in the composition of a new work for voice and Max/MSP/Jitter. In this work, the text sung by the voice will be generated live in a manner similar to that outlined above but will also employ rule-based algorithms that do not require predetermined texts. This latter technique seems a particularly interesting way to introduce a spontaneous element to the performance. Also being explored is a more responsive musical process whereby the performer is not simply responding to events determined by the computer but becomes more of a musical instigator. In regard to the new work being developed, this might involve the use of speech analysis and recognition to help determine musical events.

5. References

[1] D. Zicarelli, “An Extensible Real-Time Signal Processing Environment for MAX,” in Proceedings of the 1998 International Computer Music Conference, Ann Arbor, MI: International Computer Music Association, pp. 463-466, 1998.

[2] J.-B. Thiebaut, <http://www.mshparisnord.org/themes/EnvironnementsVirtuels/00070887-001E7526>. April 2004.