Experimenting with Chambre: Music, Image and Interaction

Prof. Paolo G. Bottoni, PhD

Stefano Faralli

Prof. Anna Labella

M° Claudio Scozzafava

Department of Computer Science, University of Rome “La Sapienza”, Italy

Abstract

We present Chambre, a distributed architecture able to accommodate several tools for generative and interactive artistic production. These range from different generative techniques and translators towards different multimedia formats to support for interaction with the performer and coordination tools that ensure synchronised multimedia effects. We illustrate the use of Chambre through examples of some multimedia events realised within its framework.

1. Introduction

Starting from the almost naive remark that music is a sort of intermediate object between a formal and a natural language, it is worth attempting to apply to it a generative approach in the vein of formal languages.

Such a formal approach can still leave room for creativity. It does so both by leaving to the composer the responsibility for establishing the generating rules and/or controls, and by allowing the composer to actively intervene in the generation process through interactive devices. In this way, automatic generation can support creativity on the expressive side.

Although a formal approach is favoured in the case of musical language because of its higher abstractness, similar approaches exist in many other fields of the creative arts, from painting to installations and live performances.

Referring to formal languages for the automatic generation of creative artefacts allows us to consider the different concrete kinds of language (musical, iconic and others) as different interpretations of a single, more general, abstract language. This suggests that it might be interesting to try to transfer techniques and methods from one artistic field to another. This approach was well known in earlier times, for example in the use of analogy in the Middle Ages or in the encyclopaedic approach to culture of Athanasius Kircher [14]. It is now an aspect of multimedia culture.

In our line of research, rather than focusing on specific generation techniques, we propose an open framework that can accommodate different algorithmic, rule-based, or biologically inspired generation techniques. In this perspective, which calls for original architectural solutions, our focus is on translation and interpretation techniques, which can be considered relatively independent of the specific generation techniques.

A second fundamental aspect of our proposal is the coupling of generation and translation tools with interaction and coordination techniques. The sentences of one specific concrete language can have multiple interpretations, and no translation is privileged. The whole process can therefore develop through phases in which each language in turn provides the starting point for further generation acts, which are then translated towards the other languages. This is the case, for instance, when a musical sentence is mapped to an abstract representation describing the pattern of relations between consecutive musical events, and the identification of such patterns steers the generation of graphical effects as modifications of some basic form.

Alternatively, the process can be steered by some external agent (a human, a process or other) whose inputs are acquired through external devices. For example, a Webcam can capture the movements of a performer, and image analysis tools can interpret these movements so as to generate parameters steering the generation process.
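To make the first scenario concrete, the following Python sketch abstracts a melody into the pattern of relations between consecutive notes and uses that pattern to deform a basic form, here a regular polygon. It is a hypothetical illustration, not Chambre code. The melody is assumed to be given as MIDI note numbers, and the functions `contour` and `deform_polygon`, as well as the deformation rule itself, are assumptions chosen for illustration.

```python
import math

def contour(notes: list[int]) -> list[str]:
    """Abstract a melody (MIDI note numbers) into relations between consecutive notes."""
    symbols = []
    for prev, cur in zip(notes, notes[1:]):
        if cur > prev:
            symbols.append("U")  # ascending step
        elif cur < prev:
            symbols.append("D")  # descending step
        else:
            symbols.append("R")  # repeated note
    return symbols

def deform_polygon(pattern: list[str], base_radius: float = 1.0,
                   step: float = 0.1) -> list[tuple[float, float]]:
    """Hypothetical mapping: one vertex per relation, pushed out for 'U', in for 'D'."""
    offsets = {"U": step, "D": -step, "R": 0.0}
    n = len(pattern)
    vertices = []
    for i, symbol in enumerate(pattern):
        radius = base_radius + offsets[symbol]
        angle = 2 * math.pi * i / n  # vertices evenly spaced around the circle
        vertices.append((radius * math.cos(angle), radius * math.sin(angle)))
    return vertices

print(contour([60, 62, 62, 59]))  # ['U', 'R', 'D']
```

The graphical side only sees the abstract pattern, so a transposed melody produces the same deformation.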

All these concepts have been incorporated into Chambre: generation through automatic processes, coordination of several generation and translation processes, and management of interaction. Chambre is a software architecture that uses multimodal inputs to produce multimedia objects with a hypermedia and virtual structure. Designers of multimedia applications or performances can use it to realise artefacts that offer their users a multisensorial experience. Chambre supports different interpretations of the same inputs, which may even vary in real time, possibly steered by interaction with humans or other software sources. This ability makes Chambre a flexible and open tool, easily adaptable to other situations and applications.

The rest of this paper is structured as follows. Section 2 presents the Chambre architecture. Section 3 discusses aspects of the construction of multimedia performances with Chambre, and Section 4 illustrates some experiences with it. Finally, Section 5 reviews work related to both architectural and artistic aspects, and Section 6 draws conclusions.

2. Chambre architecture

Chambre is an open architecture that allows several processing units to be configured into networks of multimedia tools able to exchange information. Each multimedia object can receive, process and produce data, and can form local networks with the (software) objects connected to it. In Chambre, communication can thus occur on several channels, providing flexibility and robustness.

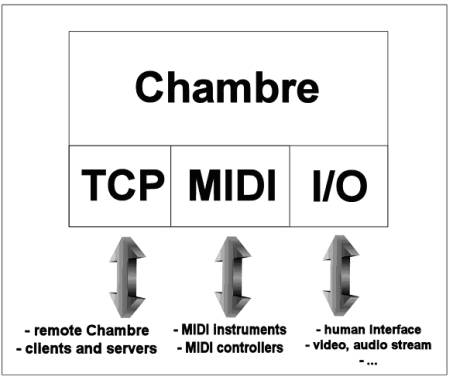

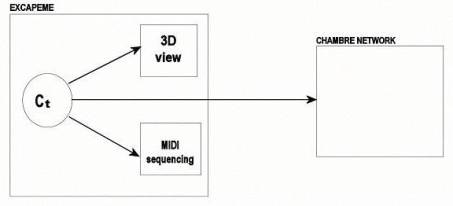

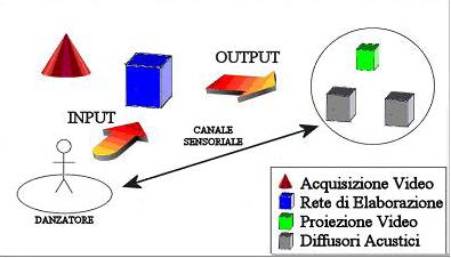

Figure 1 shows the relation between Chambre and its input and output channels.

The design of Chambre is centred on a notion of interoperability. Communication between software elements occurs on simple channels that carry a simple form of message, namely formatted strings, with a proper encoding of the type of request and/or information being transmitted. Special channels can also be devised, as is the case for the MIDI channels used in the applications illustrated in this paper.

Figure 1: Communication between Chambre and the external world.
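A minimal sketch of such a string-based channel follows. It assumes a hypothetical `KIND|key=value;key=value` layout in which the prefix encodes the type of request or information. The concrete format used by Chambre is not given here, so the layout, the `Message` class and the `encode`/`decode` functions are illustrative assumptions.

```python
from dataclasses import dataclass, field

@dataclass
class Message:
    kind: str  # encodes the type of request or information, e.g. "SET" or "DATA"
    fields: dict[str, str] = field(default_factory=dict)

def encode(msg: Message) -> str:
    """Serialise as one formatted string: KIND|k1=v1;k2=v2 (keys/values must avoid ';' and '=')."""
    body = ";".join(f"{key}={value}" for key, value in msg.fields.items())
    return f"{msg.kind}|{body}"

def decode(line: str) -> Message:
    """Parse a formatted string back into a Message; a missing body yields no fields."""
    kind, _, body = line.partition("|")
    fields = {}
    if body:
        for item in body.split(";"):
            key, _, value = item.partition("=")
            fields[key] = value
    return Message(kind, fields)

wire = encode(Message("SET", {"param": "tempo", "value": "120"}))
print(wire)  # SET|param=tempo;value=120
```

Plain text lines keep the channel language-neutral, so in principle any program able to write to a TCP socket could take part in the exchange.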

A Chambre application is typically built by interactively specifying a dataflow graph, where edges are communication channels and nodes are processing units exposing ports. These ports can be adapted to receive channels from within the same subnet, or through TCP or MIDI connections. Specific components can act as synchronizers among different inputs, thus providing a basic form of coordination. A further form of coordination comes from adopting the Observable-Observer pattern for communication between a data producer and its renderers. Hence, each Chambre component, connected to the rest of the network via its channel interface, is left autonomous as regards the production of outputs towards the user and the interaction with the user.
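The following sketch shows how nodes exposing ports, a synchronizer, and Observer-style notification of renderers fit together. It assumes a single-process, synchronous setting. The classes `Node` and `Synchronizer` are hypothetical and do not reproduce the Chambre API.

```python
from typing import Any, Callable

class Node:
    """A processing unit; its output is pushed to all registered observers (Observer pattern)."""

    def __init__(self, name: str, process: Callable[[Any], Any]):
        self.name = name
        self.process = process
        self.observers: list["Node"] = []

    def connect(self, other: "Node") -> None:
        """Create an edge (channel) from this node's output to another node's input."""
        self.observers.append(other)

    def receive(self, data: Any, port: str = "in") -> None:
        # A plain node has a single input, so the port name is not used here.
        result = self.process(data)
        if result is not None:  # None means "nothing to emit"
            self.emit(result)

    def emit(self, data: Any) -> None:
        for observer in self.observers:
            observer.receive(data, port=self.name)  # the sender's name identifies the port

class Synchronizer(Node):
    """Waits until every expected port has delivered a value, then emits them together."""

    def __init__(self, name: str, ports: list[str]):
        super().__init__(name, lambda data: data)
        self.ports = ports
        self.pending: dict[str, Any] = {}

    def receive(self, data: Any, port: str = "in") -> None:
        self.pending[port] = data  # a newer value on the same port overwrites the older one
        if all(p in self.pending for p in self.ports):
            combined = tuple(self.pending[p] for p in self.ports)
            self.pending = {}
            self.emit(combined)
```

A renderer registered as an observer does not need to know who else consumes the same data, which keeps each component autonomous in producing its own output.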

These architectural choices make Chambre particularly suited for dynamic reconfiguration, typically driven by interaction. The supported forms of reconfiguration are substitution, addition or removal of producers or translators of data at either end of a communication channel. These can occur during the execution of a performance, by temporarily suspending the processing of some components while the rest of the network keeps working.
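The three forms of reconfiguration, together with the temporary suspension of a component, can be sketched as follows. `Network` and its methods are hypothetical. In this single-threaded sketch, data addressed to a suspended component is buffered and delivered to its replacement when the component is resumed, while the other components keep processing.

```python
from typing import Any, Callable

class Network:
    """Hypothetical run-time reconfigurable dataflow network (not the Chambre API)."""

    def __init__(self) -> None:
        self.handlers: dict[str, Callable[[Any], Any]] = {}
        self.edges: dict[str, list[str]] = {}
        self.suspended: set[str] = set()
        self.buffers: dict[str, list[Any]] = {}

    def add(self, name: str, handler: Callable[[Any], Any]) -> None:
        self.handlers[name] = handler
        self.edges.setdefault(name, [])
        self.buffers.setdefault(name, [])

    def connect(self, src: str, dst: str) -> None:
        self.edges[src].append(dst)

    def remove(self, name: str) -> None:
        """Drop a component and every channel that touches it."""
        for table in (self.handlers, self.edges, self.buffers):
            table.pop(name, None)
        self.suspended.discard(name)
        for targets in self.edges.values():
            targets[:] = [t for t in targets if t != name]

    def suspend(self, name: str) -> None:
        self.suspended.add(name)

    def replace(self, name: str, handler: Callable[[Any], Any]) -> None:
        """Substitute a component's processing while keeping its channels."""
        self.handlers[name] = handler

    def resume(self, name: str) -> None:
        """Reactivate a component and deliver the data buffered while it was suspended."""
        self.suspended.discard(name)
        pending, self.buffers[name] = self.buffers[name], []
        for data in pending:
            self.send(name, data)

    def send(self, name: str, data: Any) -> None:
        if name in self.suspended:
            self.buffers[name].append(data)  # held back; other components are unaffected
            return
        result = self.handlers[name](data)
        if result is not None:
            for dst in self.edges[name]:
                self.send(dst, result)
```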

3. Construction of multimedia performances

Chambre can be used for the construction of multimedia performances in which performers can interact with generative tools to steer their computations, or to define translations of the outputs of these tools into multimedia events.

Interaction can occur at different levels. First, the performer can reconfigure the network, replacing some generative devices or rendering tools with others. Second, one can act on the generative devices or on the rendering tools, modifying their state or some of their parameters. Third, one can modify the type of translation from the generated data to the presentation.

In all these cases, interaction can occur in an explicit or in an implicit way. Explicit interaction occurs through direct manipulation of representations of the affected level. Examples are the network graph for reconfiguration, the rules or the state of a generative tool such as a cellular automaton, or the volume levels of a MIDI output device. Implicit interaction consists in capturing modifications that the performer induces in the environment and translating them, through suitable scripts, into modifications of one of the following: the network, the parameters under which the generative tool works, or the mapping between inputs and outputs of a rendering device connected to a generative one.

For example, one can modify the rules governing the evolution of a cellular automaton which provides inputs for a Chambre network. The same effect can, however, also be achieved by making the data structure of the rules amenable to manipulation not only through direct user interaction but also through the adaptation of rule parameters.

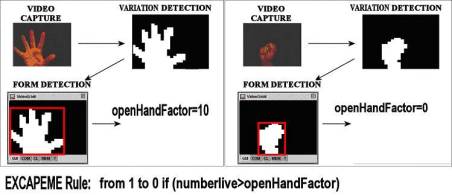

As an example, consider the rule of Figure 2, which describes how the action of a performer can be integrated into a rule of a cellular automaton, by combining the recognition of particular gestures with a check on the current configuration of a cell.

Figure 2: An example of integration of performer interaction with state change in cellular automata.
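The actual rule of Figure 2 is not reproduced in the text, so the sketch below only illustrates the idea with an invented rule. A recognised gesture is combined with a check on the cell's state and neighbourhood. Gestures are assumed to arrive as labels such as `"raise_arm"` from some gesture recogniser. When no gesture matches, a Game of Life style law applies.

```python
from typing import Optional

def next_state(state: int, live_neighbours: int, gesture: Optional[str]) -> int:
    """Hypothetical transition combining a recognised gesture with the cell's configuration.

    state: 0 (quiescent) or 1; live_neighbours: count in the Moore neighbourhood;
    gesture: label from a gesture recogniser, or None if nothing was recognised.
    """
    if gesture == "raise_arm" and state == 0 and live_neighbours >= 2:
        return 1  # the performer's action seeds a cell next to existing activity
    if gesture == "sweep" and state == 1:
        return 0  # the performer's action clears a live cell
    # No matching gesture: fall back to a Game of Life style law (B3/S23), chosen as an example.
    if state == 1:
        return 1 if live_neighbours in (2, 3) else 0
    return 1 if live_neighbours == 3 else 0
```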

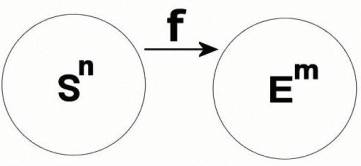

After the generation of the current configuration, interaction can affect different aspects of a translation. We exploit a generic model in which a formal generative device provides symbols that a translation mechanism maps to events rendered through some medium. This model is represented in Figure 3, where both the input space and the event space can be multidimensional. With reference to this abstract view of the translation process, interaction can occur on three aspects:

- the definition of the domain, by applying transformation functions to the set of symbols Sn

- the selection of the translation function f to be applied

- the definition of the codomain, by modifying the events produced by the function f.

Figure 3: An abstract view of the translation process.
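The three interaction points can be sketched as a pipeline made of a domain transformation, a translation function f and a codomain transformation, each of which can be replaced at run time. `Translator`, the integer symbols, and the MIDI-like event dictionaries are illustrative assumptions.

```python
from typing import Any, Callable, Iterable

Symbol = int
Event = dict[str, Any]

def identity(x: Any) -> Any:
    return x

class Translator:
    """Hypothetical translator: domain transform -> translation function f -> codomain transform."""

    def __init__(self, f: Callable[[Symbol], Event],
                 domain_map: Callable[[Symbol], Symbol] = identity,
                 codomain_map: Callable[[Event], Event] = identity):
        self.domain_map = domain_map      # interaction on the domain: transform the symbols
        self.f = f                        # interaction on the choice of f
        self.codomain_map = codomain_map  # interaction on the codomain: transform the events

    def translate(self, symbols: Iterable[Symbol]) -> list[Event]:
        return [self.codomain_map(self.f(self.domain_map(s))) for s in symbols]
```

Each attribute can be reassigned while a performance runs. For instance, setting `domain_map` to `lambda s: s + 12` shifts every incoming symbol before translation, and the choice of f and the event transformation remain untouched.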

3.1 Generative tools

A typical example of a generative tool is provided by cellular automata (CA). A cellular automaton is defined as a potentially infinite grid of cells, each occupied by an identical replica of a Moore automaton with a finite set of states Q. The evolution of the grid is synchronous: at each step, each cell performs a state transition based on its current state and on the current states of its neighbours (the definition of neighbourhood can vary).

A designated state q0 in Q is called the quiescent state. The law of evolution is usually such that a cell in state q0 with only quiescent neighbours remains in q0. Hence, if we start from a configuration of the grid with only a finite set of non-quiescent cells, the set of non-quiescent cells remains finite at each step. We call Core the subset of non-quiescent cells. The configuration of Core at each time step can be used as input for a rendering tool.
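The following sketch makes some assumptions purely for the sake of the example: a two-dimensional CA with binary states, q0 = 0, the Moore neighbourhood, and the Game of Life law. Under that law, a quiescent cell with quiescent neighbours stays quiescent. If a configuration is represented by its Core alone, each step only needs to visit Core cells and their neighbours.

```python
from collections import Counter

Cell = tuple[int, int]

def neighbours(cell: Cell) -> list[Cell]:
    """The eight cells of the Moore neighbourhood."""
    x, y = cell
    return [(x + dx, y + dy) for dx in (-1, 0, 1) for dy in (-1, 0, 1) if (dx, dy) != (0, 0)]

def step(core: set[Cell]) -> set[Cell]:
    """One synchronous step of the CA, visiting only Core cells and their neighbours.

    A cell absent from the counter has only quiescent neighbours: a quiescent one stays
    quiescent by the law, and a Core one dies (Life: fewer than two live neighbours),
    so neither needs to be visited.
    """
    counts = Counter(n for cell in core for n in neighbours(cell))
    return {cell for cell, c in counts.items() if c == 3 or (c == 2 and cell in core)}

blinker = {(0, -1), (0, 0), (0, 1)}
print(sorted(step(blinker)))  # [(-1, 0), (0, 0), (1, 0)]
```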

Using CA in the context of multimedia production requires realising a sort of producer-consumer pattern, in which the CA is allowed to progress only after the content of Core has been processed by the rendering devices. We have exploited CA by interfacing Chambre with ExCAPeME, a system for managing, interacting with and presenting CA, described in Section 4.1.
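A minimal version of this producer-consumer coupling is sketched below, using Python's standard `queue` and `threading` modules. The names `run_ca` and `render` are hypothetical, and the renderer only records each Core as a stand-in for actual graphics or MIDI output. `Queue.join` makes the producer wait until the consumer has called `task_done` for the configuration just published.

```python
import queue
import threading
from typing import Callable

def run_ca(step: Callable[[frozenset], frozenset], initial: frozenset,
           steps: int, frames: queue.Queue) -> None:
    """Producer: publishes each Core and advances only after the renderer has processed it."""
    core = initial
    for _ in range(steps):
        frames.put(core)
        frames.join()  # blocks until the consumer has called task_done() for this Core
        core = step(core)
    frames.put(None)  # sentinel: no further configurations

def render(frames: queue.Queue, rendered: list) -> None:
    """Consumer: a stand-in for a graphics or MIDI renderer that just records each Core."""
    while True:
        core = frames.get()
        try:
            if core is None:
                return
            rendered.append(core)  # actual rendering would happen here
        finally:
            frames.task_done()

if __name__ == "__main__":
    frames: queue.Queue = queue.Queue()
    rendered: list = []
    consumer = threading.Thread(target=render, args=(frames, rendered))
    consumer.start()
    run_ca(lambda c: frozenset(x + 1 for x in c), frozenset({0}), 3, frames)
    consumer.join()
```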

Other approaches we have been experimenting with include the use of rewriting rules, stochastic methods such as Markov processes, constraint systems such as those defined by patterns (in the sense of formal languages [5]), and synchronisation mechanisms such as those provided by complementarity (in the sense of DNA computing [4]).

The XMUSIC system [8] integrates several techniques. Through a graphical interface, it allows the definition of networks of modules in which each module acts either as a producer of sequences of musical events or as a selector of, or a constraint on, the products of other modules.

In particular, one can express constraints on the composition that depend, for example, on the need to synchronise different polyphonic lines (vertical controls) or on compliance with the laws of tonal composition (horizontal controls). Hence, at any given development stage of a musical composition, there is a finite set of possible Follow sets, i.e. sets of musical events representing admissible developments of what has been produced up to that stage. Besides vertical and horizontal controls, XMUSIC includes local controls, i.e. controls that restrict the analysis to a fixed number of previous events, in the style of Markov chains. It also includes patterns expressing constraints on the global shape of the composition (e.g. repetitions), and the possibility of introducing variations.
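The computation of a Follow set can be sketched as the subset of candidate events that satisfy all active controls. The three rules below are toy stand-ins chosen for illustration and are not the rules actually implemented in XMUSIC: a C major scale as horizontal control, consonance with another voice as vertical control, and a bound on leaps from the previous note as local control. Events are simplified to MIDI pitches.

```python
from typing import Callable, Iterable, Sequence

Constraint = Callable[[Sequence[int], int], bool]  # (history, candidate) -> admissible?

C_MAJOR = {0, 2, 4, 5, 7, 9, 11}  # pitch classes of the C major scale
CONSONANCES = {0, 3, 4, 7, 8, 9}  # unison/octave, thirds, fifth, sixths (mod 12)

def horizontal(history: Sequence[int], candidate: int) -> bool:
    """Toy tonal law: stay within the C major scale."""
    return candidate % 12 in C_MAJOR

def make_vertical(other_voice: int) -> Constraint:
    """Toy synchronisation law: be consonant with the note sounding in another line."""
    return lambda history, candidate: abs(candidate - other_voice) % 12 in CONSONANCES

def local(history: Sequence[int], candidate: int) -> bool:
    """Markov-style control looking only at the last event: no leap wider than a fifth."""
    return not history or abs(candidate - history[-1]) <= 7

def follow_set(history: Sequence[int], candidates: Iterable[int],
               constraints: list[Constraint]) -> set[int]:
    """Admissible continuations: candidates satisfying every active constraint."""
    return {c for c in candidates if all(rule(history, c) for rule in constraints)}

print(sorted(follow_set([60], range(55, 72), [horizontal, make_vertical(48), local])))
# [55, 57, 60, 64, 67]
```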

3.2 Musical and Graphical rendering

The component-based architecture of Chambre allows the integration of several translators able to produce representational events from heterogeneous data sources, both static and dynamic. Such software may consist of new components explicitly designed for Chambre, or of existing applications receiving input on some standard channel.

In particular, Chambre can communicate data on several communication ports, such as System I/O, TCP, MIDI, or RTP (the Real-time Transport Protocol), which the Java Media Framework supports for video streaming.

Communication through the TCP/IP protocol supports the distribution of the computation. This distribution can be physical, e.g. across different installations of Chambre, or logical, e.g. by communicating with other software such as MaxMSP [1], either to receive data or to handle particular types of representation. When Chambre is used as the rendering environment, i.e. when it receives data from other sources, it can convert them to the desired format and then apply transformation operations.

A number of operations have been defined and are directly available to a Chambre user, in particular several image processing operations. Among them, we mention:

- VariationDetection: evaluates differences with respect to a reference image; typically used to identify the movement of objects with respect to a background.

- FormDetection: identifies regions of connected pixels whose colour differs from a reference colour.

- MaxScanLine: follows local maxima in the content of an image.

- CromaKey: cuts an image with respect to a threshold and a reference image.

- KeyOverlay: overlays an image onto another without drawing the areas that have the same colour as a reference one.
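The sketch below implements simplified versions of VariationDetection, FormDetection and KeyOverlay to give an idea of what some of these operators compute. It works on small grey-level images stored as lists of lists. The representation, the absolute-difference threshold and the use of 4-connectivity are assumptions. The actual Chambre operators work on video frames and may differ in detail.

```python
from collections import deque

Image = list[list[int]]  # grey levels, row-major

def variation_detection(frame: Image, reference: Image, threshold: int) -> Image:
    """Binary mask: 1 where the frame differs from the reference by more than threshold."""
    return [[1 if abs(p - r) > threshold else 0 for p, r in zip(row, ref_row)]
            for row, ref_row in zip(frame, reference)]

def form_detection(image: Image, reference_colour: int) -> list[set[tuple[int, int]]]:
    """Regions of 4-connected pixels whose value differs from reference_colour."""
    if not image:
        return []
    height, width = len(image), len(image[0])
    seen: set[tuple[int, int]] = set()
    regions = []
    for y in range(height):
        for x in range(width):
            if (y, x) in seen or image[y][x] == reference_colour:
                continue
            region, frontier = set(), deque([(y, x)])
            seen.add((y, x))
            while frontier:  # breadth-first flood fill
                cy, cx = frontier.popleft()
                region.add((cy, cx))
                for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                    if (0 <= ny < height and 0 <= nx < width and (ny, nx) not in seen
                            and image[ny][nx] != reference_colour):
                        seen.add((ny, nx))
                        frontier.append((ny, nx))
            regions.append(region)
    return regions

def key_overlay(top: Image, bottom: Image, key_colour: int) -> Image:
    """Draw top over bottom, leaving bottom visible where top has the key colour."""
    return [[b if t == key_colour else t for t, b in zip(top_row, bottom_row)]
            for top_row, bottom_row in zip(top, bottom)]
```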

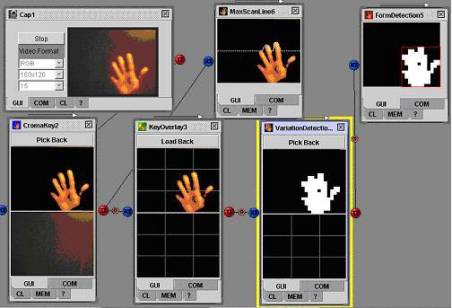

An example of a Chambre network exploiting some of these operators is shown in Figure 4. Image operators are connected through Rx and Tx ports, and each component presents its own interface, either to show the result of the processing or to allow interaction with the operator parameters.

Figure 4: An example of a Chambre network, connecting different image processing operators.

4. Past experiences

Our group has been experimenting with architectures for the production of multimedia events for some time now. In this section we illustrate some of the products of this experimentation.

4.1 ExCAPeME

ExCAPeME (Extended Cellular Automata with Pluggable Multimedia Elements) is an immediate precursor of Chambre and can be integrated with it [3]. It is an open environment which supports the interactive definition, editing and execution of CA of up to 3 dimensions, and which can manage the simultaneous rendering of a CA using different forms of presentation. It provides two main extensions to the basic model of cellular automata. First, it allows neighbourhoods of any size: one can specify a dependency on elements at positions relative to the current cell as well as at fixed places. The neighbourhood and the evolution law are, however, uniform over the whole universe.

Second, it introduces time in the laws, so that a law can take into account the number of time instants an automaton has remained in the same state.
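A timed law can be sketched by storing, for each cell, how many time instants it has spent in its current state. The rule below is hypothetical: a Life-like law in which a living cell dies after `max_age` instants regardless of its neighbours.

```python
from typing import NamedTuple

class Cell(NamedTuple):
    state: int  # 0 = quiescent, 1 = alive
    age: int    # time instants spent so far in the current state

def timed_step(cell: Cell, live_neighbours: int, max_age: int = 3) -> Cell:
    """Hypothetical timed law: Life-like rule, but a living cell dies after max_age instants."""
    if cell.state == 1:
        survives = live_neighbours in (2, 3) and cell.age < max_age
        new_state = 1 if survives else 0
    else:
        new_state = 1 if live_neighbours == 3 else 0
    # The counter grows while the state is unchanged and restarts on every transition.
    new_age = cell.age + 1 if new_state == cell.state else 0
    return Cell(new_state, new_age)
```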

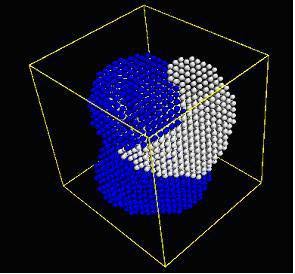

Figure 5: A representation of a 3D cellular automaton simulating the diffusion behaviour of two gases.

Any type of multimedia presentation can, in principle, be attached in the form of a plug-in. Support for 1D, 2D, 2.5D and 3D presentation is directly provided (see Figure 5), and sound rendering can be applied to CA of any dimension. The mapping between CA configurations and their actual rendering can also be defined. It is possible to exploit ExCAPeME both as a standalone application and as a node of a distributed Chambre environment.

In the latter case, the cellular automata managed by ExCAPeME act as generative tools, and the built-in graphics and MIDI rendering tools can produce effects on their own. At the same time, data describing the current configuration of the cellular automaton can be sent via a TCP/IP stream to Chambre for more sophisticated translations, according to the scheme of Figure 6.

Figure 6: ExCAPeME acting simultaneously as a standalone multimedia system and as a generator for a Chambre environment.

Although ExCAPeME provides several tools for explicit interaction with the state of the cellular automata and for the definition of parameter translations, it was not used for implicit interaction with a performer.

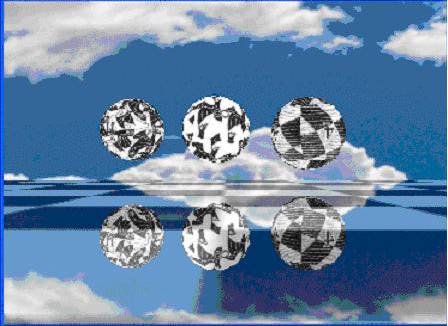

4.2 Metamorphose

A step in this direction was represented by the system set up for Metamorphose, a performance in which a human player, by performing a composition for clarinet, affected the generation of video-synchronised musical events. The system integrated the MaxMSP software for audio generation into the Chambre architecture via a suitable wrapper. The dynamics of the sound played by the performer provided real-time data for video and music management: when the sound dynamics exceeded certain thresholds, defined according to the different phases of the composition, some recorded musical events were activated or disabled. Figure 7 shows an example of a figure generated during the performances, in which the metamorphic figures are projected onto rotating polyhedra.

Figure 7: A picture produced during the Metamorphose performance.
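The threshold mechanism can be sketched as an edge detector over a per-phase threshold on the sound dynamics. Several elements are assumptions: RMS amplitude as the dynamics measure, the `Phase` and `DynamicsTrigger` names, and the event identifiers. In Metamorphose this logic ran within the MaxMSP/Chambre setup, whose internals are not described here.

```python
import math
from dataclasses import dataclass

def rms(samples: list[float]) -> float:
    """Root-mean-square amplitude of a block of audio samples, used here as 'dynamics'."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

@dataclass
class Phase:
    name: str
    threshold: float   # dynamics level that triggers this phase's events
    events: list[str]  # identifiers of recorded events (hypothetical)

class DynamicsTrigger:
    """Activates a phase's events when the dynamics cross its threshold upwards and
    disables them when the dynamics fall back below it."""

    def __init__(self, phases: list[Phase]):
        self.phases = phases
        self.current = 0     # index of the current phase of the composition
        self.active = False  # whether the current phase's events are active

    def update(self, level: float) -> list[tuple[str, str]]:
        phase = self.phases[self.current]
        above = level > phase.threshold
        if above and not self.active:
            self.active = True
            return [("activate", e) for e in phase.events]
        if not above and self.active:
            self.active = False
            return [("disable", e) for e in phase.events]
        return []  # no threshold crossing: nothing changes

    def next_phase(self) -> list[tuple[str, str]]:
        """Move to the next phase, disabling events left active by the previous one."""
        ended = []
        if self.active:
            ended = [("disable", e) for e in self.phases[self.current].events]
            self.active = False
        self.current += 1
        return ended
```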

In Metamorphose the audio channel played a prominent role, while the sequence of images was predetermined (apart from possible rendering effects) and synchronised with the musical events. Hence, the spatial deployment of the performance played no role in it.

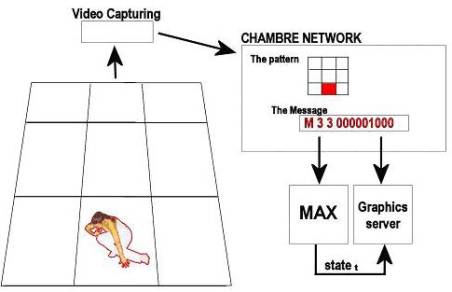

4.3 Matrice1

Spatial aspects were taken into consideration in the Matrice1 performance, which represents the most complex usage of Chambre to date, exploiting different types of interaction. The domain Sn of the generated inputs is a matrix M of size 3×3, abstractly representing the spatial grid in which three dancers could be positioned during the performance, as captured by a video camera (see Figure 8).

The patterns corresponding to the positions of the dancers in the captured area are considered over time. The translation function f(M(t)), from grid configurations in time to parameters for event creation, is therefore in fact a function g(M(t), M(t-1), ..., M(1), M(0)), i.e. a function of the history of the dancers' positions. Here, MaxMSP is used as an algorithmic string translator, steering a deterministic finite state automaton whose transitions occur upon the identification of well-defined patterns. The new state is communicated to the “Graphics server”, thus realising an integrated management of algorithmic procedures and audiovisual material.

Figure 8: A schematic representation of the Chambre environment for Matrice1
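One way to read g is that the automaton's state summarises the history M(0), ..., M(t). The output at time t can then depend on the whole history of positions, while each step only looks at M(t). The sketch below uses invented pattern symbols and a hypothetical transition table; in Matrice1 this role was played by MaxMSP.

```python
Grid = tuple[tuple[int, ...], ...]  # 3x3 occupancy matrix M(t), 1 = a dancer in that cell

def classify(m: Grid) -> str:
    """Abstract one grid configuration into a pattern symbol (hypothetical patterns)."""
    cells = [(r, c) for r, row in enumerate(m) for c, v in enumerate(row) if v]
    if cells and len({r for r, _ in cells}) == 1:
        return "row"  # all dancers aligned horizontally
    if cells and len({c for _, c in cells}) == 1:
        return "col"  # all dancers aligned vertically
    return "free"

# Hypothetical transition table; unlisted (state, symbol) pairs leave the state unchanged.
TRANSITIONS = {
    ("calm", "row"): "tension",
    ("tension", "col"): "climax",
    ("tension", "free"): "calm",
    ("climax", "free"): "calm",
}

def run(grids: list[Grid], start: str = "calm") -> list[str]:
    """Feed M(0), M(1), ... to the DFA; the state at time t summarises the whole history."""
    state, states = start, []
    for m in grids:
        state = TRANSITIONS.get((state, classify(m)), state)
        states.append(state)  # each new state would be communicated to the graphics server
    return states
```

With these definitions, a grid in which all dancers stand in one column leaves the state at "calm" when it arrives first. The same grid yields "climax" when it follows a grid with all dancers in one row, which is the kind of dependence on earlier configurations that g expresses.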

Figure 9 shows the spatial organization of devices for a Matrice1 performance. A Webcam is positioned at a height of 4 meters, capturing movements in a cone. The video signal is transmitted to a Chambre network where the dancers’ positions are identified by image processing operators and used to produce video and audio events. The video is projected onto a screen in front of the dancers, while the audio is diffused through lateral speakers.
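The last stage of position identification turns the centroids of the regions found by the image operators into the 3×3 matrix M. It could look like the following sketch; the `centroid` and `to_grid` helpers and the uniform partition of the camera frame are assumptions.

```python
def centroid(region: set[tuple[int, int]]) -> tuple[float, float]:
    """Centre of a region of (row, column) pixels, returned as (x, y)."""
    xs = [col for _, col in region]
    ys = [row for row, _ in region]
    return sum(xs) / len(xs), sum(ys) / len(ys)

def to_grid(points: list[tuple[float, float]], width: int, height: int,
            size: int = 3) -> list[list[int]]:
    """Map (x, y) pixel positions to a size x size occupancy matrix M (1 = occupied)."""
    m = [[0] * size for _ in range(size)]
    for x, y in points:
        col = min(int(x * size / width), size - 1)   # clamp points on the right edge
        row = min(int(y * size / height), size - 1)  # clamp points on the bottom edge
        m[row][col] = 1
    return m
```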

Such a configuration allows bidirectional communication between the dancers and the audiovisual context. If a dancer’s movements induce environmental variations, these affect the artistic performance, thus realising a multisensorial loop through the audio and visual feedback (see Figure 10).

The key feature of Matrice1 is that implicit interaction, realised through the positions of the dancers, affects both graphics and music generation in real time. However, the exploitation of space is restricted to the two-dimensional grid onto which their positions are mapped.

Figure 9: A schematic representation of the interactive system involving dancers and Chambre tools.

Figure 9 labels (Italian): Acquisizione video = Video capture; Rete di elaborazione = Processing network; Proiezione video = Video projection; Diffusori acustici = Loudspeakers.

5. Related work

The Chambre architecture allows a component-based style of programming, where components are endowed with communication interfaces and a system results from the connection of different interfaces. Such a system can be specified interactively by the user by composing a flowgraph. In this section we consider both aspects related to the Chambre architecture and aspects related to the computer-based production of artistic material.

Flowgraph models have often been used for the definition of configurable architectures, due to the ease with which a user can devise and define the dependencies between elements. An example in the field of user interface development environments is Amulet [16], where elements are connected by a web of constraints. Our proposal is in the style of the LabView [2] or Prograph [7] tools, where connections between elements describe the passage of data.

Frameworks for the construction of distributed (object-oriented) systems have usually focused on message passing (actually remote procedure calls or method invocations) rather than on data. In these frameworks, the need to create stubs and proxies usually hinders reconfiguration. Coulson et al. have addressed the problem of reconfiguration by proposing a component-based definition of the middleware itself [6]. Their solution involves an explicit representation of dependencies to determine the implications of removing or replacing a component. In our approach, components describe the type of data they can produce or receive, so that the creator of a network of Chambre components can redefine it while preserving the type correctness of the connections. On the other hand, since a component simply ignores inputs it is not interested in, inconsistent connections can be tolerated.
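The difference between type-checked connection and tolerant reception can be sketched as follows. `Component` and `type_correct` are hypothetical names, and data types are reduced to string labels.

```python
from dataclasses import dataclass, field
from typing import Any, Optional

@dataclass
class Component:
    name: str
    produces: set[str] = field(default_factory=set)  # data types emitted on its output
    accepts: set[str] = field(default_factory=set)   # data types it is interested in

    def receive(self, data_type: str, payload: Any) -> Optional[tuple[str, str, Any]]:
        """Inputs of uninteresting types are silently ignored rather than rejected."""
        if data_type not in self.accepts:
            return None
        return (self.name, data_type, payload)  # stand-in for actual processing

def type_correct(src: Component, dst: Component) -> bool:
    """A connection is type-correct if some produced type is accepted by the receiver."""
    return bool(src.produces & dst.accepts)
```

A network editor could use `type_correct` to guide the user when drawing connections. The tolerant `receive` means that a connection which slips through unchecked does no harm.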

Figure 10: A schematic view of the multisensorial loop.

Several researchers and artists are devising ways to employ multimedia tools as creative devices. We draw the attention of the reader to some experiences which are more closely connected to the application described here. Cogeneration of graphics and music has been the objective of [13, 11], where, however, one medium took control of the other. Flavia Sparacino’s work explores the use of a dancer’s movements as a way to govern an orchestra of instruments virtually associated with the dancer’s body parts [17]. This requires the mappings between movements and controls to be predefined, whereas Chambre allows dynamic reconfiguration as well as the intervention of other sources for parameter control. Fels et al. have proposed MusiKalscope, a graphical musical instrument that combines a virtual kaleidoscope with a system for generating musical improvisations [10]. It provides the performer with virtual sticks through which to drive effects in the two media. In this case too, the coupling of the two subsystems appears to be rigid.

The spatial distribution of performers is stressed in the distributed musical rehearsal environment [12], where an orchestra conductor and the players can be physically distant from one another. The peculiarity of the task, however, requires specific solutions to preserve the spatial perception of the music sources, which are not necessary here.

6. Conclusions

We have illustrated a line of development towards the generation of multimedia artistic experiences. It relies on interaction with formally based generative tools and on the steering of translation processes that map the generated configurations onto audio and visual events. This line is supported by an open architecture providing a flexible framework for the inclusion of new types of generators and multimedia events.

Along this line we have progressively expanded the role of interaction, both with the human performers and among software components. In particular, moving from purely explicit interaction (in the ExCAPeME environment), we have allowed for different types of implicit interaction. These involve both audio (Metamorphose) and spatial (Matrice1) sources, and act both on generation parameters and on the activation of scripts at specific synchronisation points.

It seems natural to move towards the integration of sources of different natures within a single environment, which raises interesting problems concerning their coordination, and towards a fuller exploitation of the spatial domain. In particular, we plan to move towards capturing 3D events produced by the performer.

In any case, we are interested in investigating the relations between space and time in the generation of the sentences that are represented as multimedia events.

This also involves perceptual and cognitive aspects. Indeed, sounds and images not only pertain to different sensory channels, but also call into action different types of memory. Objects are seen synchronously, whereas sounds are listened to in a strictly sequential manner. At different moments in the history of music, the introduction of some form of spatiality in music to help memory and manipulation has been considered [9, 15].

It would therefore be interesting to study the formal structures under which spatial events can be mapped to musical effects and, conversely, musical inputs can have spatial consequences. Moreover, the need to coordinate the two aspects also affects the choice of the generative tools. It privileges those able to produce configurations that give rise to significant developments both when interpreted sequentially and when interpreted synchronically.

References

[1] Cycling ’74: Max/MSP for Mac and Windows. Technical Introduction.

[2] LabView: The software that powers virtual instrumentation. http://www.ni.com/labview/.

[3] P. Bottoni, M. Cammilli, and S. Faralli. Generating Multimedia Content with Cellular Automata. IEEE MultiMedia, 11(4):78–83, 2004.

[4] P. Bottoni, A. Labella, V. Manca, and V. Mitrana. Superposition Based on Watson-Crick Complementarity. To appear.

[5] P. Bottoni, A. Labella, P. Mussio, and G. Paun. Pattern Control on Derivations in Context-Free Rewriting. Journal of Automata, Languages and Combinatorics, 3(1):3–28, 1998.

[6] G. Coulson, G. S. Blair, M. Clarke, and N. Parlavantzas. The design of a configurable and reconfigurable middleware platform. Distributed Computing, 15(2):109–126, 2002.

[7] P. T. Cox, F. R. Giles, and T. Pietrzykowski. Prograph: a step towards liberating programming from textual conditioning. In Proceedings of the 1989 IEEE Workshop on Visual Languages, pages 150–156. IEEE CS Press, 1989.

[8] F. D’Antonio. Linguaggi di pattern e regole di sincronizzazione nello sviluppo di un sistema di composizione musicale assistita. Master's thesis in Computer Science, Università La Sapienza, Roma, 2002.

[9] M. E. Duchez. La représentation spatio-verticale du caractère musical grave-aigu et l’élaboration de la notion de hauteur de sons dans la conscience musicale occidentale. Acta Musicologica, LI:54–73, 1979.

[10] S. Fels, K. Nishimoto, and K. Mase. MusiKalscope: A graphical musical instrument. IEEE MultiMedia, 5(3):26–35, 1998.

[11] Ma. Goto and Y. Muraoka. A virtual dancer “Cindy”: interactive performance of a music-controlled CG dancer. In Proc. of Lifelike Computer Characters, 1996.

[12] D. Konstantas, Y. Orlarey, O. Carbonnel, and S. Gibbs. The distributed musical rehearsal environment. IEEE MultiMedia, 6(3):54–64, 1999.

[13] A. Kotani and P. Maes. An environment for musical collaboration between agents and users. In Proc. of Lifelike Computer Characters, 1995.

[14] A. Labella and C. Scozzafava. Music and Algorithms: a historical perspective. Studi musicali, 32(1):3–50, 2003.

[15] G. Mazzola. The Topos of Music. Birkhäuser, 2002.

[16] B. A. Myers, R. G. McDaniel, R. C. Miller, A. S. Ferrency, A. Faulring, B. D. Kyle, A. Mickish, A. Klimovitski, and P. Doane. The Amulet environment: New models for effective user interface software development. IEEE Trans. Softw. Eng., 23(6):347–365, 1997.

[17] F. Sparacino, G. Davenport, and A. Pentland. Media in performance: Interactive spaces for dance, theatre, circus, and museum exhibits. IBM Systems Journal, 39(3 & 4):479, 2000.