Flocking Orchestra - to play a type of generative music by interaction between human and flocking agents

Prof. T. Unemi, BEng, MEng, DEng.

Department of Information Systems Science, Soka University, Tokyo, Japan.

e-mail: unemi@iss.soka.ac.jp

Dr. D. Bisig, BSc, MSc, PhD.

Artificial Intelligence Laboratory, University of Zurich, Switzerland.

e-mail: dbisig@ifi.unizh.ch

1. Concept

"Flocking Orchestra" is an interactive installation that employs flocking algorithms to produce music and visuals, thereby creating an interaction between artificial and real life [1]. The artificial life aspect consists of agents that behave basically according to the BOID rules [2] and form complex flocking groups in a virtual 3D space. The users, who represent real life, can interact with the agents through their motions, which are tracked by a video camera. Each agent controls a MIDI instrument whose playing style depends on the agent's state. In this system, the user acts as a conductor who influences the flock's musical activity. In addition to gestural interaction, the acoustic properties of the system can be modified on the fly through an intuitive GUI. The acoustic and visual output of the system results from the combined behaviors of the users and the flock. The installation therefore mixes natural and artificial reality at the behavioral level.

The system has been designed to run on a variety of computational configurations, ranging from small laptops to exhibition-scale installations. This scalability allows people to experience the installation not only at exhibition sites but also at home.

2. Insight

The basic mechanism that realizes flocking behavior is the calculation of an acceleration vector for each agent at each time step. This vector combines three types of forces: collision avoidance with other agents and with the boundary surfaces, velocity alignment with other agents, and attraction toward the center of gravity of other agents. Each agent perceives agents of its own species only within a limited perception area. In addition to these three basic flocking forces, a fourth force attracts agents toward the front surface of the virtual space. This force is applied to an agent when it detects motion in the real world. Motion is detected by computing the difference in color values at each pixel location between two successive camera images. By tuning parameters such as the balance among the forces and the minimum velocity, a wide variety of flocking behaviors can be realized.
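The following Python sketch illustrates one time step of the mechanism described above. It is not the installation's code: the vector helpers, the `Agent`/`Params`/`frame_motion` names, all weights, the cube-shaped space with the front surface at z = 0, the separation term that grows as neighbors get closer, the wall-repulsion rule, the maximum speed, and the idea that each agent reads motion from a window of camera pixels are assumptions made for illustration.

```python
import math
from dataclasses import dataclass

Vec = tuple[float, float, float]


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def norm(a: Vec) -> float:
    return math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)


@dataclass
class Agent:
    pos: Vec
    vel: Vec
    species: str  # "melodist" or "percussionist"


@dataclass
class Params:  # illustrative values (assumed), not those of the installation
    size: float = 10.0     # space is the cube [0, size]^3; z = 0 is the front surface
    radius: float = 2.0    # perception radius, also the wall-avoidance distance
    w_sep: float = 1.0     # collision avoidance with other agents
    w_ali: float = 0.5     # velocity alignment
    w_coh: float = 0.1     # attraction toward the neighbors' center of gravity
    w_wall: float = 1.0    # collision avoidance with the boundary surfaces
    w_front: float = 2.0   # fourth force: attraction toward the front surface
    min_speed: float = 0.05
    max_speed: float = 0.5


def frame_motion(prev, curr, x0: int, y0: int, x1: int, y1: int) -> float:
    """Mean absolute RGB difference of two frames inside [x0, x1) x [y0, y1),
    normalized to 0..1. Frames are lists of rows of (r, g, b) tuples, 0..255."""
    total, count = 0, 0
    for y in range(y0, y1):
        for x in range(x0, x1):
            total += sum(abs(c - p) for c, p in zip(curr[y][x], prev[y][x]))
            count += 1
    return total / (3 * 255 * count) if count else 0.0


def acceleration(me: Agent, flock: list[Agent], motion: float, p: Params) -> Vec:
    sep = ali = coh = (0.0, 0.0, 0.0)
    n = 0
    for other in flock:
        if other is me or other.species != me.species:
            continue  # agents only perceive their own species
        d = sub(me.pos, other.pos)
        dist = norm(d)
        if dist == 0.0 or dist > p.radius:
            continue  # outside the perception area
        n += 1
        sep = add(sep, scale(d, 1.0 / (dist * dist)))  # magnitude 1/dist: closer pushes harder
        ali = add(ali, other.vel)
        coh = add(coh, other.pos)
    acc = scale(sep, p.w_sep)
    if n:
        acc = add(acc, scale(sub(scale(ali, 1.0 / n), me.vel), p.w_ali))
        acc = add(acc, scale(sub(scale(coh, 1.0 / n), me.pos), p.w_coh))
    wall = [0.0, 0.0, 0.0]
    for axis in range(3):  # repel from any boundary surface closer than radius
        if me.pos[axis] < p.radius:
            wall[axis] += p.radius - me.pos[axis]
        if p.size - me.pos[axis] < p.radius:
            wall[axis] -= p.radius - (p.size - me.pos[axis])
    acc = add(acc, scale((wall[0], wall[1], wall[2]), p.w_wall))
    # fourth force: pull toward z = 0, proportional to the motion this agent detected
    return add(acc, (0.0, 0.0, -p.w_front * motion))


def step(flock: list[Agent], motions: list[float], p: Params, dt: float = 1.0) -> None:
    accs = [acceleration(a, flock, m, p) for a, m in zip(flock, motions)]
    for a, acc in zip(flock, accs):  # update only after all accelerations are known
        v = add(a.vel, scale(acc, dt))
        speed = norm(v)
        if speed < p.min_speed:  # enforce the minimum velocity
            v = scale(v, p.min_speed / speed) if speed > 0.0 else (p.min_speed, 0.0, 0.0)
        elif speed > p.max_speed:
            v = scale(v, p.max_speed / speed)
        a.vel = v
        a.pos = tuple(min(max(c, 0.0), p.size) for c in add(a.pos, scale(v, dt)))
```

In an interactive loop, one would compute a `motion` value per agent from the two latest camera frames (for example, by passing the pixel window under the agent's projected position to `frame_motion`) and then call `step`. Computing all accelerations before moving any agent keeps the update order from biasing the flock.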

The appearance of the agents can be adjusted by modifying both their shapes and their colors. The current version provides six choices of shape: square, sphere, paper plane, paper crane, ASCII faces, and hexadecimal ID. Two color values can be freely assigned to the agents of each species; these two colors are then mixed depending on the amount of motion the agent has detected. Alternatively, agents can be given a single fixed color originally sampled from Japanese origami paper.
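A minimal sketch of the motion-dependent color mixing, assuming a linear blend between the two assigned colors driven by a motion amount normalized to 0..1. The blending rule and the name `mix_color` are assumptions, not taken from the installation.

```python
def mix_color(calm: tuple[int, int, int], excited: tuple[int, int, int],
              motion: float) -> tuple[int, int, int]:
    """Blend two RGB colors (0..255) linearly; motion is clamped to 0..1."""
    t = min(max(motion, 0.0), 1.0)  # 0 -> calm color, 1 -> excited color
    return tuple(round(c + (e - c) * t) for c, e in zip(calm, excited))
```

For example, `mix_color((255, 0, 0), (0, 0, 255), 0.25)` returns `(191, 0, 64)`, a red shifted a quarter of the way toward blue.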

It is also possible to change the background images covering the bounding planes of the virtual space. Each surface can be filled with a uniform color, an image loaded from a file, or an image captured from live video. Finally, the entire background can be covered by a single image instead of the five textured bounding planes.
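The background options can be pictured as a small configuration structure. The sketch below uses hypothetical names (`Source`, `Surface`, `Background`) and assumes that the five textured planes are the back, left, right, top, and bottom faces, with the front face left open toward the viewer; the text does not specify which faces are meant.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional

PLANES = ("back", "left", "right", "top", "bottom")  # assumed: the front face stays open


class Source(Enum):
    UNIFORM = "uniform color"
    FILE = "image file"
    LIVE = "live video"


@dataclass
class Surface:
    source: Source
    color: tuple[int, int, int] = (0, 0, 0)  # used when source is UNIFORM
    path: Optional[str] = None               # used when source is FILE

    def __post_init__(self) -> None:
        if self.source is Source.FILE and not self.path:
            raise ValueError("an image-file surface needs a path")


@dataclass
class Background:
    planes: dict[str, Surface] = field(
        default_factory=lambda: {name: Surface(Source.UNIFORM) for name in PLANES})
    whole: Optional[Surface] = None  # if set, one image replaces all five planes

    def layers(self) -> list[tuple[str, Surface]]:
        """Return the surfaces to draw behind the agents."""
        if self.whole is not None:
            return [("whole", self.whole)]
        return [(name, self.planes[name]) for name in PLANES]
```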

The agents are divided into two species: melodists and percussionists. As the names indicate, the two species play different types of instruments. A melodist plays a tonal instrument by mapping its position to the parameters of a note: its vertical coordinate is mapped to the note's pitch, its horizontal coordinate to the note's pan position, and its depth to the note's amplitude. Via the GUI, a variety of musical parameters can be defined, including instrument types, rhythm, tempo, scale, pitch range, and chord progression. These parameters can be chosen to mimic particular musical genres such as classical, rock, jazz, and ethnic music.
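The melodist's position-to-note mapping can be sketched as follows. The scale table, the MIDI note range, the normalization of coordinates to 0..1, and the convention that agents nearer the front surface (z = 0) play louder are assumptions; `melodist_note` is a hypothetical name. Quantizing the vertical position to scale degrees guarantees that every position yields a note of the chosen scale.

```python
MAJOR = (0, 2, 4, 5, 7, 9, 11)  # semitone offsets of a major scale


def _clamp01(v: float) -> float:
    return min(max(v, 0.0), 1.0)


def melodist_note(x: float, y: float, z: float, degrees=MAJOR,
                  lowest: int = 48, octaves: int = 2) -> tuple[int, int, int]:
    """Map normalized coordinates (0..1) to (MIDI note, pan, velocity).

    x: horizontal -> pan (0 = hard left, 127 = hard right)
    y: vertical   -> pitch, quantized to `degrees` over `octaves` octaves
    z: depth      -> velocity, assuming z = 0 is the front and loudest
    """
    steps = len(degrees) * octaves
    i = min(int(_clamp01(y) * steps), steps - 1)  # index of the scale step
    octave, degree = divmod(i, len(degrees))
    note = lowest + 12 * octave + degrees[degree]
    pan = round(_clamp01(x) * 127)
    velocity = 1 + round((1.0 - _clamp01(z)) * 126)  # stay in 1..127; 0 means note-off
    return note, pan, velocity
```

For example, `melodist_note(0.5, 0.5, 0.5)` returns `(60, 64, 64)`: middle C, panned to the center, at medium velocity. Swapping `MAJOR` for another offset table is one way a GUI scale setting could be realized.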

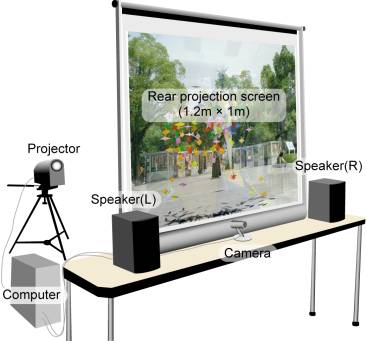

3. Configuration

This installation requires a Macintosh computer (G4 or G5 CPU) running the Mac OS X operating system, and a web camera. Since all currently available Macintosh computers meet these requirements, the installation runs on any of them. For the installation to respond quickly enough to interaction, the screen resolution should be reduced to 320 x 240 pixels on slower machines (below 600 MHz). Powerful machines such as a dual 2.5 GHz G5 can easily display more than 512 agents at a resolution of 640 x 480 pixels. Figure 1 shows a typical exhibition configuration, such as the one that will be used at the GA conference 2005.

Figure 1. The hardware configuration for an exhibition-scale installation.
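The resolution advice in the Configuration section amounts to a simple rule, sketched below with hypothetical function names. Halving both dimensions from 640 x 480 to 320 x 240 cuts the pixel count per frame from 307,200 to 76,800, a factor of four, which reduces per-pixel drawing work accordingly. Treating 600 MHz as a hard threshold, and using 640 x 480 for every faster machine, are assumptions.

```python
def window_size(cpu_mhz: float) -> tuple[int, int]:
    """320 x 240 below 600 MHz; otherwise 640 x 480 (assumed default)."""
    return (320, 240) if cpu_mhz < 600 else (640, 480)


def pixel_ratio(a: tuple[int, int], b: tuple[int, int]) -> float:
    """How many times more pixels resolution a has than resolution b."""
    return (a[0] * a[1]) / (b[0] * b[1])
```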

References

[1] Unemi, T. and Bisig, D.: Playing Music by Conducting BOID Agents - A Style of Interaction in the Life with A-Life, Proceedings of A-Life IX, pp. 546-550, 2004.

[2] Reynolds, C. W.: Flocks, herds, and schools: A distributed behavioral model, Computer Graphics, 21(4), pp. 25-34 (SIGGRAPH '87 Conference Proceedings), 1987.