Evolving the Shape of Things to Come: A Comparison of Direct Manipulation and Interactive Evolutionary Design

Andreas Lund

Interactive Institute, Tools for Creativity and

Department of Informatics, Umeå University.

e-mail: andreas.lund@interactiveinstitute.se

Abstract

This paper is

concerned with differences between direct manipulation and interactive

evolutionary design as two fundamentally different interaction styles for

creative tasks. In order to empirically compare the two interaction styles, two

prototypes for typeface design were designed and implemented. An experimental

evaluation was carried out involving both prototypes and two different kinds of

task, one where the goal was clearly defined and one where the subjects had to

formulate the goal themselves. An analysis of the results suggests that

participants of the experiment experienced the direct manipulation prototype to

offer a higher degree of ability to affect the design of typefaces, compared to

interactive evolutionary design. Subjects also experienced themselves to be

more active while using the direct manipulation interface. The interactive

evolutionary design prototype was reported to be most suited for creative

tasks, while the direct manipulation prototype was experienced to be more

suitable for tasks where the goal is clearly defined.

1. Introduction

To varying

degrees, different artifacts may be described in terms of themes and variations

on themes. The figure below shows the letter ‘A’ in four different typefaces,

each one unique in its own right. Still, each typeface has a lot of features in

common that make them similar in many respects. Put differently, the typefaces

may be understood as different variations on the same theme.

![]()

Given the

notions of theme and variation on theme, design may – partly – be conceived as

an activity that includes exploration of a theme in search of pleasing

variations. In this example and in this paper, the object of design is

typefaces, but it could be something else: sunglasses, china, human-computer

interfaces or other kinds of objects. On a concrete level, the form of an

artifact may be described in terms of its absolute characteristics. For

instance, a specific variation on a typeface theme may have a certain weight,

width and other measures that define the exact form of the letters. On an

abstract level, the form may be described in terms of potential

characteristics, that is, a description that allows for a multitude of concrete

variations, each with its own unique form and qualities. Thus, an abstract

description of an artifact is rather a description of a design space, a set of

potential artifacts, a set of potential variations on a theme.

Now, as

suggested by Ben Shneiderman [14], a challenge for designers of user interfaces

and human-computer interaction researchers is to design and develop information

technology that supports creativity. This paper reports on a project where I

have tried to take on that challenge. The project aims at comparing different interaction

styles that can be used to support creative exploration of design spaces. In

this paper, I am concerned with two fundamentally different ways of

interactively exploring a typeface design space: direct manipulation and

interactive evolutionary design. However, it is not the typographical aspects

of this design space that are at the center of this paper. Rather, my main

interest is the knowledge and understanding of users’ experiences from using

different modes of interaction in the exploration of design spaces. More

specifically, I am interested in (1) how users experience their ability to

affect the object of design while using the different interaction styles, (2)

the level of experienced activity, (3) whether they had fun using the

prototypes and (4) if users think that one interaction style is more

appropriate than the other for a specific kind of task.

The outline of

the paper is as follows. First, I present what I take to be important aspects

of the two interaction styles discussed in this paper: direct manipulation and

interactive evolutionary design. Second, the design of two software prototypes

is presented followed by a presentation of an experimental evaluation that was

conducted as part of the project. Finally, the results of the evaluation are presented.

2. Direct Manipulation

Direct

manipulation interfaces, a term coined by Ben Shneiderman [12] in the

early eighties, are the kind of interface that is characteristic of most modern

personal computer application user interfaces. Typically, direct manipulation

interfaces incorporate a model of a context (such as a desktop environment)

supposedly familiar to users. Rather than giving textual commands (e.g.

"remove file.txt", "copy file1.txt file2.txt") to an

imagined intermediary between the user and the computer, the user acts directly

on the objects of interest to complete a task. More precisely, crucial features

of direct manipulation interfaces include [13] continuous and perceivable

representations of the objects of interest, physical actions instead of

complicated syntax and incremental actions that are rapid and reversible.

Undoubtedly,

direct manipulation has played an important role in making computers accessible

to non-computer experts. Less certain are the reasons why direct manipulation

interfaces are so successful. It has been suggested [5] that this kind of

interaction style caters for a sense of directness, control and engagement in

the interaction with the computer. The possibilities of incremental action with

continuous feedback are believed to be an important factor of the

attractiveness of direct manipulation. Not least importantly, interaction with

direct manipulation systems is often experienced as enjoyable [13]. However,

direct manipulation is also associated with some problems that make it a less

than ideal interaction style in some situations. Recently, new interaction

styles have emerged that address the shortcomings of direct manipulation in

various ways. One example is so-called software agents [8] that, quite

contrary to direct manipulation, act on behalf of the user and relieve the

user of some of the attention and cognitive load traditionally involved in

the interaction with large quantities of information. However, this relief

comes at the cost of lost user control and requires the user to put trust into

a pseudo-autonomous piece of software.

3. Interactive Evolutionary Design

Another emerging

style of human-computer interaction of special interest for creative tasks is

that of interactive evolutionary design (sometimes referred to as aesthetic

selection). Interactive evolutionary design is inspired by notions from

Darwinian evolution and may be described as a way of exploring a large –

potentially infinite – space of possible design configurations based on the

judgment of the user. This approach, hereafter referred to as interactive

evolutionary design, typically involves the use of genetic algorithms [9] in combination with a human evaluator.

Rather than, as

is the case with direct manipulation, directly influencing the features of an

object, the user influences the design by means of expressing her judgment of

design examples. Each design example can be thought of as consisting of two

different representations, a genotype

and a phenotype. The phenotypical

representation is a concrete and perceivable rendition of an object that the

user evaluates. The genotypical representation is an encoded description of the

traits that a specific object in the design space has. The genotypical

representation can be implemented in many different ways, but the simplest kind

is a string of binary digits with fixed length. Examples judged positively by

the users are given an increased possibility of being selected by the genetic

algorithm to carry their traits to a new generation of design examples by means

of a reproduction process. The

reproduction process typically involves some form of crossover, that is, the

offspring’s traits are a combination of traits from the parents. The offspring

may also be subject to mutation in order to add an amount of random change to

the offspring. The offspring that constitute the new generation are, like the

first generation, subject to the scrutiny of the user. In an iterative fashion,

new examples are produced based on the selections made by the user and – hopefully

– each new generation contains examples that converge towards configurations

that are well adapted to the preferences of the user.

Variations of

interactive evolutionary design have been employed to support design and creation

of a variety of objects. For instance, Karl Sims [15] has applied interactive

evolutionary techniques to evolve artistic 2D images and 3D plant structures.

Another well-known example of artistic work is that of Todd and Latham [17] who

developed Mutator, a program that

supports exploration of 3D form. An interesting, non-artistic application of

interactive evolutionary design is the work by Gatarski [4] who applied

interactive evolution to design web-advertising banners. Much related to this

paper is the parametric font definition that allows for breeding of typefaces,

by Ian Butterfield and Matthew Lewis [3]. Matthew Lewis maintains a web site

[7] with links to papers and other resources related to interactive

evolutionary design. Peter J. Bentley’s book Evolutionary Design [2] gives an

excellent overview of evolutionary approaches to design, both interactive and

non-interactive.

A striking

difference between direct manipulation and interactive evolutionary design is

that direct manipulation seems to imply an ideology that a user should at all

times be in control of what is going on at the user interface. Interactive

evolutionary design may be understood as an approach where the user may expect

the unexpected, at the cost of giving up some amount of control. In the context

of creative tasks, this aspect may add an important dimension to human-computer

interaction that direct manipulation cannot provide. It seems plausible that

many people do not generally exhibit the skills, training and experience or do

not have the time required for advanced design work. In this respect,

interactive evolutionary design is very promising considering that most people

are competent at expressing likes and dislikes about their physical and social

environments, that is, they have a skill for judgment. Interactive evolutionary

design may be understood as a way to capitalize on that competence to make

design processes accessible to a wider audience.

4. Prototype Design

In the following

three subsections the prototypes used in the experiment are presented.

4.1 A Typeface design space

The design of

the two prototypes has been guided by the principle that they should be as

similar as possible to each other in all respects, except those features that

concern the style of interaction. One important common feature of the

prototypes is the notion of a typeface design space, that is, the space of

possible typefaces that can be explored by the prototypes. The design space is

organized by a number of parameters (seven in the current implementation) and

constitutes an abstract parameterized typeface. The parameterized typeface

includes a description of the shape of each glyph. However, these descriptions

are not formulated in terms of absolute values, but in terms of parameters.

Thus, the design of a specific typeface in the design space may be conceived of

as a kind of collaborative design with contributions from both the designer of the

abstract parameterized typeface and from the user/designer that decides on the

values for the parameters.

The design of

each glyph in this typeface is partly governed by one or more of the seven

parameters. The overall design is deliberately made in a way that makes it

possible to have relatively few parameters with application to as many glyphs

as possible. In other words, I have tried to avoid a design space where a

parameter is of relevance only to one or very few glyphs. Rather, a change of

one parameter should propagate throughout the whole typeface.

In the table

below the effect of the different parameters is illustrated.

| Description | Illustration |
| --- | --- |
| Vertical bar width | |
| Horizontal bar width | |
| Outer roundness | |
| Inner roundness | |
| Width | |
| Lower case horizontal scale | |
| Lower case vertical scale | |
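As a rough illustration of what describing a glyph "in terms of parameters" rather than absolute values can look like, the sketch below holds the seven parameters from the table in a record and derives the outline of a capital L from three of them. The field names, the unit-height coordinate system and the function `capital_l_outline` are assumptions made for this example; the paper does not show how the prototypes actually define their glyphs.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class TypefaceParameters:
    """One point in the design space: a value for each of the seven parameters."""
    vertical_bar_width: float
    horizontal_bar_width: float
    outer_roundness: float
    inner_roundness: float
    width: float
    lower_case_horizontal_scale: float
    lower_case_vertical_scale: float


def capital_l_outline(p: TypefaceParameters,
                      cap_height: float = 1.0) -> List[Tuple[float, float]]:
    """Return the polygon of a hypothetical square-cornered capital 'L'.

    The shape is stated only in terms of parameters, so changing
    `vertical_bar_width` here would also change every other glyph
    that uses the same parameter for its stems.
    """
    stem = p.vertical_bar_width     # thickness of the vertical stroke
    foot = p.horizontal_bar_width   # thickness of the horizontal stroke
    return [
        (0.0, 0.0),
        (p.width, 0.0),
        (p.width, foot),
        (stem, foot),
        (stem, cap_height),
        (0.0, cap_height),
    ]
```

A complete parameterized typeface would contain one such description per glyph, all reading from the same parameter record.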

The idea of a parametric

typeface is by no means new. A well-known example is Donald E. Knuth’s meta-font. A meta-font is, as defined by

Knuth, a “schematic description of how to draw a family of fonts” [6]. Such a

description does not define one single font but rather a set of potential

fonts. Another example of parametric fonts is the multiple masters font technology from Adobe, Inc (see for instance

[16]). A multiple master font is parametric in the sense that it includes two

or more glyph outlines. These outlines confine a design space of potential

fonts that are realized by means of interpolation between the included

outlines.
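The interpolation idea can be sketched in a few lines. This is a simplified illustration with two hypothetical master outlines given as lists of matching points; actual multiple master fonts interpolate the control points of curve outlines along one or more design axes, and the function name `interpolate_outline` is an assumption of this example.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def interpolate_outline(master_a: List[Point],
                        master_b: List[Point],
                        t: float) -> List[Point]:
    """Blend two compatible outlines point by point.

    t = 0.0 gives master_a, t = 1.0 gives master_b, and values in
    between give intermediate designs.
    """
    if len(master_a) != len(master_b):
        raise ValueError("master outlines must have the same number of points")
    return [
        ((1 - t) * xa + t * xb, (1 - t) * ya + t * yb)
        for (xa, ya), (xb, yb) in zip(master_a, master_b)
    ]
```

Every value of `t` between the two masters corresponds to one potential font, which is the sense in which the masters confine a design space.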

In this paper

the parametric fonts themselves are not the core issue. However, they are an

interesting domain of design that gives rise to challenges concerning the tools

and methods for exploring very large design spaces. Knuth expresses the

richness of parametric designs in the following way [6, p. 292]:

So many variations are possible, in fact, that the

author keeps finding new settings of the parameters that give surprisingly

attractive effects not anticipated in the original design; the parameters that

give the most readability and visual appeal may never be found since there are

infinitely many possibilities.

Knuth’s remark

concerns parametric typefaces, but could most likely be extrapolated to account

for exploration of parametric design spaces in general and motivates efforts

aiming at investigating how to better support and understand design space

exploration. In the following sections I will present the design of two

prototypes that each embodies a distinct style of supporting interactive

exploration of such spaces.

4.2 Direct manipulation prototype

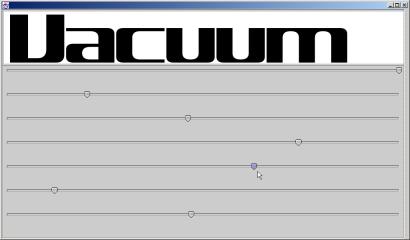

As is the case

for both prototypes, the intention has been to keep the design as clean as possible

and to include only that which is essential for each interaction style. Below

is an image of the version of the direct manipulation prototype that was used

in the experimental evaluation.

As seen in the

picture, the user interface contains only two kinds of elements: a typeface

display and a number of sliders. Each slider corresponds to one of the seven

design space parameters described in the previous section. A user of this

prototype can navigate through the typeface design space by dragging the slider

handles to the right and left. As the user drags a slider, the visual display

is continuously updated.

Other versions of

this prototype contain some features that are left out in the version

described here. For instance, the possibility to save the typeface as an

Encapsulated PostScript (EPS) file is not included. Also left out of this version

are slider labels with the names of the different parameters. I considered

including the labels, but decided to leave them out under the assumption

that the meaning of the textual labels could just as well be inferred from the

feedback of slider movement. However, the soundness of this decision is by no

means obvious and could very well motivate an experiment on its own.

4.3 Interactive evolutionary design prototype

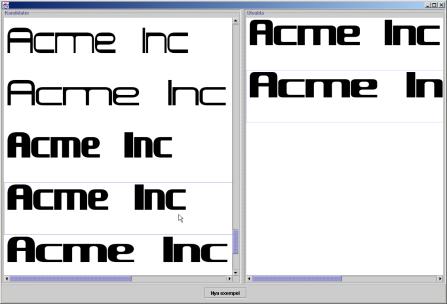

The interactive

evolutionary design prototype has exactly the same design space as the direct

manipulation prototype, but offers a very different way of exploring that

space. The image below shows a screen shot of the user interface.

The list on the

left side of the window contains typeface examples generated by the program. In

the version used in the evaluation, the list always contained fifty examples.

This set of typefaces constitutes a generation. On a monitor with a resolution

of 1024x768, five typeface examples at a time are visible in the list. The user

selects typefaces that she or he in some way finds attractive – aesthetically

or otherwise – by double-clicking a typeface in the list of generated

typefaces. As soon as the user has selected a typeface, a copy of it appears in

the list on the right side of the window. The selected typeface is not removed

from the list to the left. Thus, a single typeface may be selected several

times. Below the two lists there is a button labeled “Nya exempel” (Swedish for

“New examples”). By clicking the button, the right list is cleared and a new

set of fifty examples appears in the left list. In this context, it may be

necessary to briefly go into some of the details of the inner workings of the prototype.

The typefaces

visible in the interface may be considered as phenotypical representations of typefaces. Each typeface also has a

genotypical representation, that is,

an encoded specification of the typeface. Conceptually, the genotypical

representation of a typeface consists of a chromosome containing seven genes,

one for each design space parameter. Each gene is represented by a string of

binary digits. A chromosome can be thought of as something that identifies an

exact location in the design space, an exact description of a typeface. In

order to produce a new generation of typefaces, the genetic algorithm assigns a

probability to each typeface selected by the user so that they have equal

chance to become parents in a reproduction. The reproduction always involves

two parents and always results in two offspring. The genetic operators involved

in the reproduction of typefaces are mutation and crossover. In the particular

version of the prototype used in the evaluation, the probabilities for mutation and

crossover to occur are fixed and set to 0.08 and 0.7, respectively. Another

version of the prototype includes slider controls to adjust probabilities for

mutation and crossover.
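The reproduction step described above can be sketched roughly as follows. This is a minimal illustration, not the prototype's actual code. Taken from the text are the seven genes, the fifty examples per generation, two parents yielding two offspring, and an equal chance for each selection to become a parent. The gene length (`BITS_PER_GENE`), the single-point crossover operator, the per-bit reading of the mutation probability, the normalisation in `decode` and all function names are assumptions. The crossover and mutation probabilities are passed in as arguments.

```python
import random

NUM_GENES = 7          # one gene per design space parameter (from the text)
BITS_PER_GENE = 8      # assumption: the paper does not state the gene length
GENERATION_SIZE = 50   # the evaluated version always showed fifty examples


def random_chromosome(rng):
    """Create a genotype: NUM_GENES genes of BITS_PER_GENE random bits each."""
    return [rng.randint(0, 1) for _ in range(NUM_GENES * BITS_PER_GENE)]


def decode(chromosome):
    """Map each gene to a parameter value in [0, 1] (assumed normalisation)."""
    values = []
    for g in range(NUM_GENES):
        bits = chromosome[g * BITS_PER_GENE:(g + 1) * BITS_PER_GENE]
        as_int = int("".join(str(b) for b in bits), 2)
        values.append(as_int / (2 ** BITS_PER_GENE - 1))
    return values


def crossover(a, b, rng):
    """Single-point crossover producing two offspring (assumed operator)."""
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:], b[:point] + a[point:]


def mutate(chromosome, p_mutation, rng):
    """Flip each bit independently with probability p_mutation (assumed)."""
    return [bit ^ 1 if rng.random() < p_mutation else bit for bit in chromosome]


def next_generation(selected, p_crossover, p_mutation, rng):
    """Breed GENERATION_SIZE offspring from the user's selections.

    `selected` may list the same chromosome several times, which raises
    its chance of being drawn as a parent; otherwise every selection is
    equally likely to be chosen.
    """
    offspring = []
    while len(offspring) < GENERATION_SIZE:
        mother, father = rng.choice(selected), rng.choice(selected)
        if rng.random() < p_crossover:
            child_a, child_b = crossover(mother, father, rng)
        else:
            child_a, child_b = mother[:], father[:]
        offspring.append(mutate(child_a, p_mutation, rng))
        offspring.append(mutate(child_b, p_mutation, rng))
    return offspring[:GENERATION_SIZE]
```

In this reading, each chromosome returned by `next_generation` would be passed through `decode` to obtain the seven parameter values from which the phenotype, the typeface shown in the left list, is drawn.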

5. Experiment design

In the design of

the experiment it was considered important to allow each subject to work with

both prototypes and with different kinds of tasks. To accomplish

this, the experiment involved two kinds of tasks. In one kind of task – referred

to as the “copy task” – each subject was asked to copy or recreate a typeface

as displayed on a card presented to him or her during the experiment. The

displayed typefaces were generated from the same design space as the subjects

explored in the experiment. All the subjects performed this task with both prototypes. The

purpose of this task was to mimic a situation where the goal is explicit and

clearly defined. The second kind of task – referred to as the “creative task” –

required more creativity on the part of the participant, as this task did

not involve recreating what someone else had already designed. In this kind of

task, subjects were asked to design a textual logotype for a company. In the

fictitious scenarios presented to the participants the logotype should appear

on the products and in the marketing material of the company. The purpose of

this kind of task was to trigger a creative process to be able to assess how

the different prototypes supported that process.

The experiment

involved 16 subjects, eight women and eight men. Each subject used both

prototypes and performed both kinds of tasks with each prototype. The average

duration of an experiment session was 30 minutes. During each session,

subjects were asked to think aloud to give a verbal account of their

interaction with the prototype. These verbal accounts were recorded and

transcribed afterwards. The subjects were also asked to answer ten questions in

a questionnaire.

6. Analysis

6.1 Experienced ability to affect design

As mentioned

earlier in this paper, direct manipulation is known to cater for a strong sense

of directness and control, leaving the user in charge of the object of

manipulation. Intuitively, interactive evolutionary design seems to be a more

indirect way of interacting with computers. Two questions in the questionnaire

concerned the degree to which the users experienced that they could affect the

design of typefaces using the two different prototypes:

Q1: To what degree do you experience that you can affect the design of the typefaces using tool A (direct manipulation)?

Q2: To what degree do you experience that you can affect the design of the typefaces using tool B (interactive evolutionary design)?

Prior to

analyzing the results of these two questions I suspected that the users taking

part in the evaluation would report that they experienced the direct

manipulation prototype to offer a higher degree of ability to affect the design

of typefaces, compared to interactive evolutionary design. A look at

the scale and the different means in the table of descriptive statistics below

seems to confirm that intuition.

In order to

establish whether this difference is statistically significant, a

paired t-test was carried out:

It turns out

that the difference is very highly significant, which clearly confirms

the initial assumption about how the subjects would experience the different

tools’ ability to cater for a sense of being able to affect the design of the

typefaces.
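For reference, a paired t-test of this kind is computed from the per-subject differences between the two ratings. The notation below is introduced only for this illustration: $a_i$ and $b_i$ stand for subject $i$'s answers to Q1 and Q2, and $n = 16$ is the number of subjects reported in the experiment design.

```latex
d_i = a_i - b_i, \qquad
\bar{d} = \frac{1}{n}\sum_{i=1}^{n} d_i, \qquad
s_d = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}\left(d_i - \bar{d}\right)^2}, \qquad
t = \frac{\bar{d}}{s_d/\sqrt{n}}, \qquad
\mathrm{df} = n - 1 = 15
```

Because each subject rated both tools, the test works on the differences $d_i$ rather than on the two sets of ratings separately, so variation between subjects does not inflate the error term.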

6.2 Experienced level of activity

Direct

manipulation is – almost by definition

– a very active mode of interaction. Indeed, the requirement to be

active in order for the computer to be reactive is something that sometimes

motivates alternative interaction styles, such as software agents. My initial

intuitions about interactive evolutionary design were that it would be

experienced as a comparatively passive mode of interaction in the context of

typeface design. The users were given the following two questions:

Q3: Were you active or passive while using tool B (interactive evolutionary design)?

Q4: Were you active or passive while using tool A (direct manipulation)?

As shown on the

scale and in the table below, the mean for the level of experienced activity

using the direct manipulation interface is very high (and with a fairly low

standard deviation). This may not be very surprising. However, I would have

expected the mean for the interactive evolutionary design interface to be

somewhat lower than reported.

A t-test reveals

that the difference in experienced activity is significant, although only at the

0.05 level (p < 0.05).

It should be

mentioned that some subjects asked me about how to interpret these two

questions. On a more speculative basis, the problems of interpreting the

questions may indicate that both direct manipulation and interactive

evolutionary design involve a rather high level of activity, but different in

character. While the activity in direct manipulation typically involves the

manipulation of one single object at a time, interactive evolution involves an

active selection from a potentially large set of objects.

6.3 Experienced level of amusement

In the section

about direct manipulation in the beginning of this paper, it is observed that

direct manipulation typically is associated with a high degree of subjective

satisfaction, that is, people tend to find it pleasurable to work with direct

manipulation interfaces. I am interested in whether there are differences

between direct manipulation interfaces and interactive evolutionary design

concerning this aspect of human-computer interaction. In order to investigate

if subjects were bored or amused using the two different prototypes the

questionnaire contained the following questions:

Q5: Was it fun to use tool A? (direct manipulation)

Q6: Was it fun to use tool B? (interactive evolutionary design)

By looking at

the means of the ratings made by the subjects it seems as if both prototypes

were experienced as being fun to use.

As shown on the

scale, it appears as if the mean for the direct manipulation prototype is

slightly higher than the mean for interactive evolutionary design. However, a

t-test reveals that this difference is not statistically significant (p >

0.05).

As a note of caution, it is not obvious that

the tools or interaction styles themselves are what is experienced as fun in

the evaluation. It may very well be the case that the task domain, typeface

design, is the source of amusement.

6.4 Experienced predictability of decisions and actions

To some extent, the intelligibility of

interaction styles and user interfaces is determined by the degree to which

users experience some amount of rationality concerning the relation between

their actions and the computer’s response to the actions. In the questionnaire,

I tried to approach this issue by asking whether the subjects considered the

consequences of their actions and decisions to be predictable for the different

tools:

Q9: How would you describe the consequences of your decisions and actions using tool A? (direct manipulation)

Q10: How would you describe the consequences of your decisions and actions

using tool B? (interactive evolutionary design)

If we look at

the scale it appears at first sight as if the consequences of users’ decisions

and actions are experienced to be more predictable when using the direct

manipulation interface prototype. This may not be very surprising since one of

the cornerstones of direct manipulation is small, incremental action with

continuous feedback that allows the user to see what is happening as a result

of her actions.

However, if we look at the standard deviations

for the two means they are fairly high and a t-test reveals that we cannot

conclude – with statistical significance – that there is a difference between

the two means (p > 0.05). Thus, the data from this evaluation give

no evidence that one prototype is experienced as more predictable than the other.

6.5 For what kind of task are the different tools most suited?

As mentioned

before, each subject used both prototypes for solving two different kinds of

task. The questionnaire included two questions to capture if the subjects

experienced one kind of tool as more apt for one kind of task. The questions

were formulated as:

Q7: For what kind of task is tool A (direct manipulation) most suited?

Q8: For what kind of task is tool B (interactive evolutionary design) most

suited?

As with the other

eight questions, the answer took the form of a marking on a continuous scale.

The extremes on the scales were “Definitely type 1” and “Definitely type 2”. Type 1 referred to the

kind of task where the user was supposed to recreate a pre-designed typeface as

displayed on a card. Type 2 referred to the kind of task where the user had to

formulate the goal on her own based on a short scenario.

In the analysis,

the answer markings on the scales were re-interpreted as signifying that a

specific tool – A or B – was experienced as being suited for either type 1

tasks or type 2 tasks. It was decided that markings on the exact middle of the

scales should be left out of the data set. However, no such markings occurred.

The coding resulted in a frequency table:

| | Task 1 | Task 2 | Total |
| --- | --- | --- | --- |
| Direct manipulation | 13 | 3 | 16 |
| Interactive evolution | 5 | 11 | 16 |
| Total | 18 | 14 | |

If we look at this

table, it appears as if the direct manipulation prototype was experienced by

the subjects to be more suited for the kind of task where the goal is clearly

defined and explicit, whereas the interactive evolutionary design prototype

seems to be experienced as more suited for the more creative, scenario-based

kind of task.

To test whether

the frequency distribution among the cells in the table really is not just

coincidental, a chi square test was employed:

The chi square test

tells us that the difference in frequency distribution among the cells is very

highly significant. Thus, it seems as if there is a real difference concerning

the way that subjects experienced the suitability of the different prototypes.
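For readers who want to retrace the calculation, the sketch below computes a Pearson chi square statistic from the frequency table above. It is an illustration under stated assumptions: no continuity correction is applied, the 32 codings are treated as independent observations, and the helper names are made up for this example. The paper does not state which variant of the test was used, so the figures produced here need not match the author's output exactly.

```python
import math

# Observed frequencies from the table above (rows: tool, columns: task type).
observed = {
    "Direct manipulation": (13, 3),
    "Interactive evolution": (5, 11),
}


def pearson_chi_square(table):
    """Sum (observed - expected)^2 / expected over all cells of the table."""
    rows = list(table.values())
    row_totals = [sum(row) for row in rows]
    col_totals = [sum(col) for col in zip(*rows)]
    n = sum(row_totals)
    chi2 = 0.0
    for r, row in enumerate(rows):
        for c, obs in enumerate(row):
            # Expected count if tool and task type were unrelated.
            expected = row_totals[r] * col_totals[c] / n
            chi2 += (obs - expected) ** 2 / expected
    return chi2


def p_value_df1(chi2):
    """Upper-tail probability of a chi square variable with 1 degree of freedom."""
    return math.erfc(math.sqrt(chi2 / 2.0))


chi2 = pearson_chi_square(observed)
print(f"chi square = {chi2:.2f}, p = {p_value_df1(chi2):.4f}")
```

A 2x2 table has one degree of freedom, which is why the p-value can be obtained from the complementary error function without a statistics library.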

6.6 Some observations from verbal accounts

Only so much can

be captured in a questionnaire like the one used in this evaluation. In order

to tap into the richness of users’ interaction with the prototypes I made voice

recordings during the course of interaction. From these recordings I have made

some observations that I find interesting.

Mental modeling

When the

participants of the evaluation used the direct manipulation prototype the

typical behavior was of an explorative kind. They tried to make sense of the

interface by dragging the different sliders to the left and right just to see

what happened. Some were very careful while others, rather violently, shoved

the sliders around between the extremes. Based on that observation, the

participants did not seem to have problems making sense of what was going on

at the user interface.

In contrast

to the direct manipulation interface, the interactive evolutionary

design prototype seemed to be more of a challenge concerning the meaning

attributed to the interface and the hidden mechanisms behind it. It seems as if

the subjects in a very active way during the course of interaction tried to

develop mental models [1, 10] of the system, that is, they tried to develop

theories that could explain the behavior of the program they were using.

Notably, some subjects tried to figure out the relation between the typefaces

they selected and the new typefaces that were generated from the selection.

Some subjects seemed to try to make sense of this relation as a kind of average

relation. The following quotation shows how one subject made this very explicit

(translated from Swedish):

Well, you do not really know how he [the computer]

does it, you don’t know if it pays off to take one extreme at one end and one

extreme at the other end and believe it will become something in between. I

don’t know.

Other subjects

used a vocabulary that included words such as “mixing”, “blending” and other

words that I take to imply an understanding of the generative mechanism as an

averaging of parameters, not a recombination. Also of interest is that some

subjects seemed to attribute meaning to the order in which newly generated

typefaces were presented in the list. However, the program is not designed to

do any kind of sorting. Nonetheless, some subjects seemed to experience the

typefaces at the top of the list as having more in common with the ones they

selected from the previous generation of typefaces. As a response to my

question, if he could recognize the qualities of the selected typefaces in the

generated set, one subject responded:

Several of the first ones are variations of the two I

selected, until you come down here [the person scrolls down the list]. I would

say that it varies so much that I can’t say that [there is a resemblance with

the two previously selected].

It is not

obvious how to assess the significance of this kind of interpretation of the

interface, but it seems likely that it may affect the outcome of that which is

being designed. If objects at the end of the list are experienced to be of less

interest, users may tend not to select them.

Convergence and surprise

As mentioned

previously, the results from the questionnaire seem to point in the direction

that interactive evolutionary design is preferable for tasks where the

goal is not clearly and explicitly defined. Partly, a reason for that may be

that interactive evolutionary design is based on the presentation of examples:

the creator is given something to react to. Indeed, the whole point of this

approach is to use these reactions as a driving force in design processes.

However, one crucial aspect seems to be striking a balance between using this

force to make the design converge and preserving a degree of

surprise. On the one hand, user reactions to design examples must be handled in

a way that adapts, in this case, the typeface design to the expressed user

preferences. On the other hand, a supportive system should also allow for some

amount of surprise and unexpected designs. If this balance is found, it may be

possible to achieve a dynamic relation between the user’s preferences and the

behavior of the computer program. Put differently, if such a balance is found,

interactive evolutionary design may not only be used as a tool to externalize a

mental vision of that which is being designed, but also change such visions, by

means of presenting the unexpected.

While observing

the participants of the evaluation it appeared as if some of them experienced

both a high level of convergence and some amount of surprise. For instance,

when one of the participants used the interactive evolutionary design prototype

to design the Caveman logotype he suddenly noticed a generated typeface and

expressed:

Wow! This one felt very much like the Godfather [the movie]! That’s

cool. I’ll take the godfather.

At the end he

selected a “Godfather”-like typeface as the typeface for the logotype. Other

participants described similar experiences: they could recognize their

selections in the generated typefaces, while at the same time some typefaces

were very unexpected. Some reacted quite strongly when they saw something they

had not expected, and that kind of reaction was rare for

the direct manipulation interface, probably due to the fact that direct

manipulation – as implemented in my prototype – makes it possible for users to

continuously see what is happening while changing one parameter at a time. The

balance between convergence and surprise is of course heavily dependent on the

probability of mutation. Initially, I considered allowing the participants to

vary the mutation rate as they liked but decided that it would make a

comparison of user experiences almost impossible, considering that mutation

rate affects the outcome very dramatically.
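The sensitivity to mutation rate can be illustrated with a small sketch. It assumes a per-parameter mutation in which each parameter is replaced by a new random value with a fixed probability; this operator, the parameter count and the function names are hypothetical and are not taken from the prototype.

```python
import random

def mutate(params, rate, rng):
    """Replace each parameter by a random value in [0, 1) with
    probability `rate` (assumed operator); otherwise keep it."""
    return [rng.random() if rng.random() < rate else p for p in params]

def changed_fraction(rate, n_params=1000, seed=0):
    """Fraction of parameters that differ from the parent after mutation."""
    rng = random.Random(seed)
    parent = [0.5] * n_params
    child = mutate(parent, rate, rng)
    return sum(c != p for c, p in zip(child, parent)) / n_params

for rate in (0.0, 0.05, 0.5):
    print(f"mutation rate {rate}: {changed_fraction(rate):.3f} of parameters changed")
```

With a rate of zero the child is identical to the parent, so the design can only converge on what is already present. With a high rate most of the selected qualities are overwritten and the result is dominated by surprise. A rate chosen freely by each participant would therefore produce very different experiences of the same interface.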

7. Concluding discussion

The empirical

investigation suggests that the participants clearly experienced that the

direct manipulation interface provided better support for affecting the design

of the typefaces. However, from my observations of the experiment sessions,

interactive evolutionary design appears to have supported creativity in a way

that direct manipulation did not. This is also confirmed by the participants’

inclination to experience interactive evolution as more suitable for creative

tasks. In this context, it is interesting that the experienced activity level

was significantly lower for the interactive evolutionary design prototype compared

to direct manipulation. In a way, it may be interpreted as if we get more by

doing less.

It is an open

issue to what extent we can generalize from the results of this investigation

to other kinds of design spaces and other contexts. However, it seems to me as

if one lesson to learn from the investigation is that the important issue is

not which interaction style is better than the other. Rather, I would suggest

that a multitude of different modes of interaction, including direct

manipulation and evolutionary approaches, should be combined to cater for the

needs imposed by the situation and individual preferences.

8. References

1. Allen, Robert B. (1997). Mental Models and User Models. In M. Helander, T. K. Landauer, P. Prabhu (eds.). Handbook of Human-Computer Interaction. Elsevier Science B. V.

2. Bentley, Peter J. (1999). Evolutionary Design by Computers. Morgan Kaufmann.

3. Butterfield, I. and Lewis, M. (2000). Web page: Evolving Fonts. http://www.cgrg.ohio-state.edu/~mlewis/AED/Fonts/

4. Gatarski, Rikard. (1999). Evolutionary Banners: an Experiment with Automated Advertising Design. In Proceedings of COTIM-1999. Rhode Island, RI, USA, September 26-29.

5. Hutchins, E. L., Hollan, J. D. and Norman, D. A. (1986). Direct manipulation interfaces. In D. A. Norman and S. W. Draper (Eds.) User centered system design, 87-124. Hillsdale, NJ: Lawrence Erlbaum Associates, Inc.

6. Knuth, D. E. (1999). Digital Typography. CSLI Publications, Stanford, CA.

7. Lewis, M. (2000). Web page: Visual Aesthetic Evolutionary Design Links. http://www.cgrg.ohio-state.edu/~mlewis/aed.html.

8. Maes, Patti. (1994). Agents that reduce work and information overload. In Communications of the ACM. Vol. 37, No. 7 (pp. 31-40, 146).

9. Mitchell, Melanie. (1996). An introduction to genetic algorithms. The MIT Press.

10. Norman, D. A. (1988). The Psychology of Everyday Things. New

York: Basic Books.

11. Shneiderman, Ben. (1977). The future

of interactive systems and the emergence of direct manipulation. In Behaviour and Information Technology. Vol 1, 237-256.

12. Shneiderman, Ben. (1982). The future of interactive systems and

the emergence of direct manipulation. In Behaviour

and Information Technology. Vol 1, 237-256.

13. Shneiderman, Ben. (1997). Direct Manipulation for Comprehensible, Predictable, and Controllable User Interfaces. In Proceedings of IUI97, 1997 International Conference on Intelligent User Interfaces. Orlando, FL, January 6-9, 1997, 33-39.

14. Shneiderman, Ben. (2000). Creating

Creativity: User Interfaces for Supporting Innovation. In ACM Transactions on Computer-Human Interaction, Vol. 7, No. 1,

March.

15. Sims, K. (1991). Artificial Evolution for Computer Graphics. In Computer Graphics, 25, 319-328.

16. Tinkel, K. (1995). Mastering

multiple masters. In Adobe Magazine,

November.

17. Todd, S. and Latham, W. (1999). The

Mutation and Growth of Art by Computers. In Bentley, Peter J., Evolutionary Design by Computers. Morgan

Kaufmann.